The last NTT DATA technology blog in the Kong Gateway series introduced the Kong AI Gateway, which is built on the API Gateway. One outstanding AI feature is the recently added Retrieval-Augmented Generation (RAG) capability, provided by the AI RAG Injector plugin, which substantially reduces hallucinations in large language model (LLM) responses. RAG combines the strengths of LLMs with access to relevant, up-to-date information, yielding more accurate, contextually aware and informative responses. In this post, we will explore how easily this new AI plugin can be used and the significant role that Spring AI can play in various RAG implementation options.

Overview

Retrieval-Augmented Generation (RAG) is an approach that combines large language models (LLMs) with external knowledge retrieval systems to provide more accurate and specific answers. Knowledge retrieval systems are designed to find and extract relevant information from large datasets. Publicly available LLMs are trained on fixed datasets up to a cutoff date, so they cannot access recent, specialized, or private information. This limitation makes them less useful in many business scenarios. RAG addresses this challenge by supplying specific, current information from beyond the LLM’s training cutoff. Relevant data is retrieved and inserted into the context window of the prompt, so the model answers from information that is current and relevant to the user’s query. This reduces the likelihood of false or irrelevant output (so-called hallucinations). The approach is particularly valuable for applications that require precise and up-to-date information, such as customer support, research or decision-making use cases. Overall, RAG improves the accuracy and usefulness of AI-generated content by combining dynamic data retrieval with the generation capabilities of large language models.

RAG is straightforward to understand, but its implementation can become complex and challenging. It often involves integrating multiple new technologies and techniques with which organizations may have limited experience. As the field of RAG continues to evolve, implementations are progressing from basic, naive approaches to more advanced and modular frameworks. So-called naive RAG solutions typically integrate generation and retrieval in a straightforward manner. They are easier and faster to implement, but lack flexibility, making it hard to adapt or improve individual components of the RAG implementation. In contrast, recent architectures emphasize modularity: separate pre- and post-retrieval components allow knowledge integration to be optimized independently. For a more in-depth differentiation, I highly recommend reviewing this great foundational paper, which has influenced actual RAG framework implementations like Spring AI.

Using RAG within applications offers a significant competitive advantage and is expected to become a fundamental and essential competency for any organization leveraging generative AI, regardless of whether naive, advanced or modular RAG architectures are employed.

In the following sections, let us examine three different RAG approaches in which Kong Gateway works together with Spring AI to optimize GenAI output. Each approach represents a different level of integration, from calling external services to a fully unified gateway approach. These strategies demonstrate how leveraging Kong’s AI capabilities alongside Spring AI can enhance operational effectiveness.

Before diving into the details, I want to note that vector databases are a common and popular choice for knowledge retrieval systems. They excel at semantic search by storing high-dimensional vector embeddings and performing vector similarity searches (VSS) using methods like cosine similarity, Euclidean distance or dot product. For a more detailed explanation, I would like to highlight two great blog posts by Claudio Acquaviva, Kong Principal Architect. They explain in detail RAG in combination with Python LangChain and vector similarity search (VSS) with Redis. Redis is a supported vector database for Kong AI Gateway’s RAG solutions, alongside Postgres PgVector. Numerous sources, including the introductory section of Claudio’s RAG blog, cover the basics of RAG using embedding models, so I won’t go into them here.
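To make the three similarity measures concrete, here is a minimal sketch in plain Java. It is for illustration only: a vector database computes these measures internally over indexed embeddings, and the class and method names below are hypothetical and do not belong to Kong, Redis or Spring AI.

```java
// Minimal sketch of the three similarity measures (plain Java, no libraries).
// All names here are hypothetical and chosen for illustration only.
final class VectorSimilarity {

    // Dot product: sum of the element-wise products.
    static double dot(double[] a, double[] b) {
        requireSameLength(a, b);
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            sum += a[i] * b[i];
        }
        return sum;
    }

    // Euclidean distance: straight-line distance between the vectors (0 means identical).
    static double euclideanDistance(double[] a, double[] b) {
        requireSameLength(a, b);
        double sum = 0.0;
        for (int i = 0; i < a.length; i++) {
            double diff = a[i] - b[i];
            sum += diff * diff;
        }
        return Math.sqrt(sum);
    }

    // Cosine similarity: dot product divided by the product of the vector lengths.
    static double cosine(double[] a, double[] b) {
        double normA = Math.sqrt(dot(a, a));
        double normB = Math.sqrt(dot(b, b));
        if (normA == 0.0 || normB == 0.0) {
            throw new IllegalArgumentException("cosine similarity is undefined for a zero vector");
        }
        return dot(a, b) / (normA * normB);
    }

    private static void requireSameLength(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("vectors must have the same dimension");
        }
    }

    public static void main(String[] args) {
        double[] query = {1.0, 0.0};
        double[] sameDirection = {2.0, 0.0};
        double[] orthogonal = {0.0, 3.0};

        System.out.println(cosine(query, sameDirection));            // 1.0
        System.out.println(cosine(query, orthogonal));               // 0.0
        System.out.println(dot(query, sameDirection));               // 2.0
        System.out.println(euclideanDistance(query, sameDirection)); // 1.0
    }
}
```

Cosine similarity ignores vector length and compares direction only, which is why the two parallel vectors score 1.0 even though their Euclidean distance is not zero.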

Approach 1: Pre-3.10 reality

Before Kong AI Gateway enterprise version 3.10, teams had no choice but to implement RAG functionality as an external service or to write their own custom plugin. At that time, there was no AI RAG Injector plugin available in the Kong Plugin Hub catalogue. I consider the development of a custom RAG plugin to be much more complex than implementing an external RAG service with the help of Spring AI, which easily manages data ingestion and querying. For a clear separation of concerns, it is advisable to divide these functionalities into separate applications: one for the (regular) ingest and another for querying the vector database.

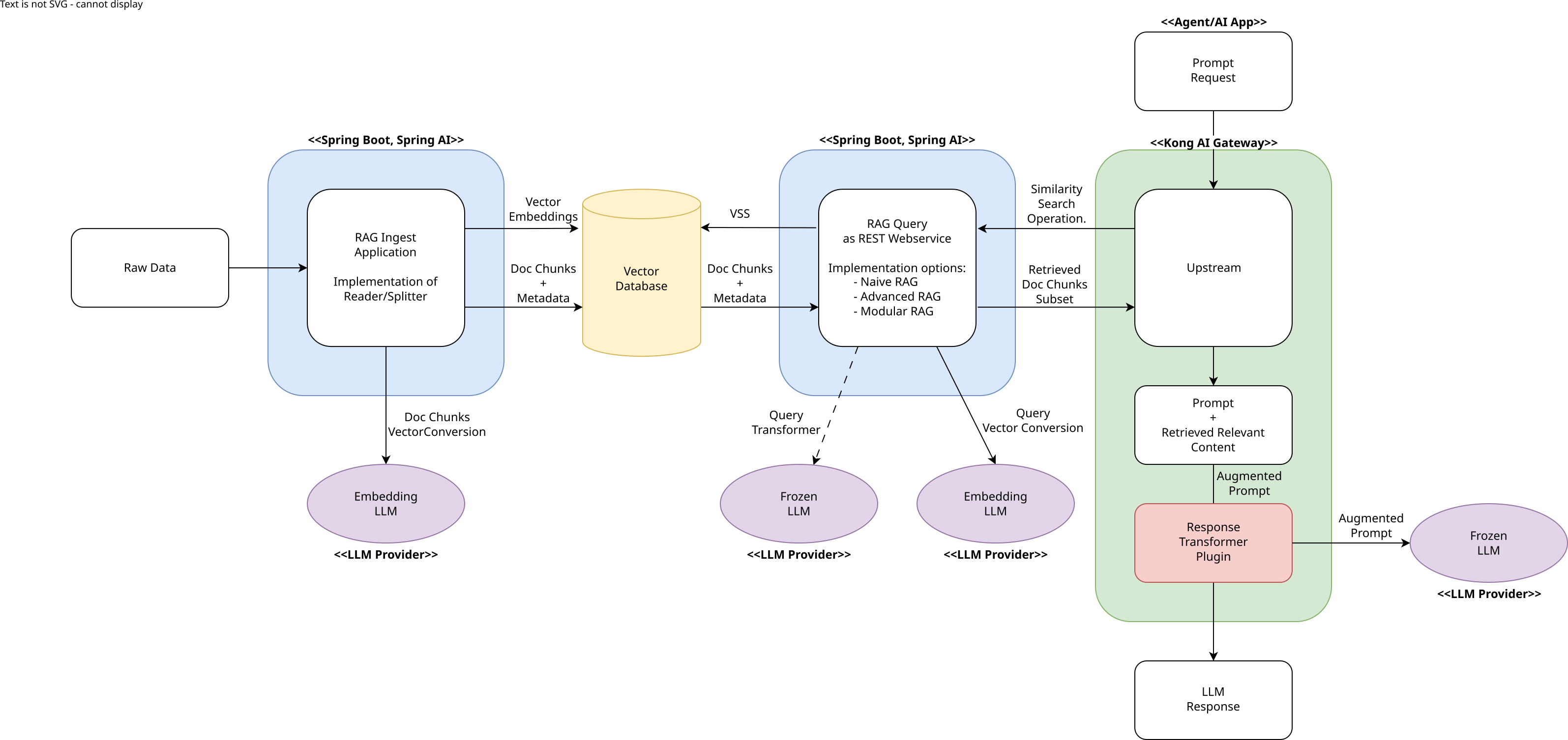

In my proof-of-concept implementation, as illustrated in the diagram, the similarity search operation is invoked through the configured Kong upstream service. The augmented LLM call is subsequently executed using the AI Response Transformer plugin. However, alternative approaches using the new Request Callout plugin together with the AI Proxy or AI Proxy Advanced plugin are also feasible.

While this approach detaches the RAG process from the gateway, it offers a significant advantage. Spring AI has begun to implement cutting-edge concepts of modular RAG, which can also be utilized in the VSS process. This includes pre-retrieval components designed to transform input queries, enhancing their effectiveness for retrieval tasks by addressing challenges such as poorly formed queries, ambiguous terms, complex vocabulary or unsupported languages. Additionally, post-processing components are employed for ranking strategies and to refine retrieved documents based on the query, addressing the need to reduce noise and redundancy in the retrieved information.
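The modular idea can be sketched in a few lines of plain Java: one step before retrieval, one step after it, each replaceable on its own. This is a simplified illustration under stated assumptions, not Spring AI's implementation. All class and method names are hypothetical, the query transformation is a trivial normalization, and the retriever is a stand-in for a real vector similarity search.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Locale;
import java.util.function.Function;

// Hypothetical sketch of a modular RAG flow: pre-retrieval -> retrieval -> post-retrieval.
final class ModularRagSketch {

    // Pre-retrieval step: clean up a poorly formed query (trim, collapse whitespace, lowercase).
    static String normalizeQuery(String rawQuery) {
        return rawQuery.trim().replaceAll("\\s+", " ").toLowerCase(Locale.ROOT);
    }

    // Post-retrieval step: remove exact duplicates and keep at most topK documents,
    // preserving the retriever's ranking order. topK must not be negative.
    static List<String> deduplicateAndLimit(List<String> documents, int topK) {
        List<String> unique = new ArrayList<>(new LinkedHashSet<>(documents));
        return unique.subList(0, Math.min(topK, unique.size()));
    }

    // Chains the steps and places the surviving documents into the prompt context.
    static String buildAugmentedPrompt(String rawQuery,
                                       Function<String, List<String>> retriever,
                                       int topK) {
        String query = normalizeQuery(rawQuery);
        List<String> context = deduplicateAndLimit(retriever.apply(query), topK);
        return "Context:\n" + String.join("\n", context)
                + "\n\nQuestion: " + rawQuery.trim();
    }

    public static void main(String[] args) {
        // Stand-in retriever; a real one would query the vector database.
        Function<String, List<String>> retriever =
                query -> List.of("doc A", "doc A", "doc B", "doc C");

        System.out.println(buildAugmentedPrompt("  What   is RAG? ", retriever, 2));
    }
}
```

Because each step is an ordinary function, a query rewriter or a reranker could replace the trivial versions here without touching the rest of the chain. That independence is the point of the modular approach.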

Today, this is not yet possible with the other two approaches utilizing the RAG Injector plugin, as it is based on a naive RAG approach. While this may not necessarily be a drawback, it should always be carefully evaluated within the specific context of the use case.

Approach 2: Hybrid transition

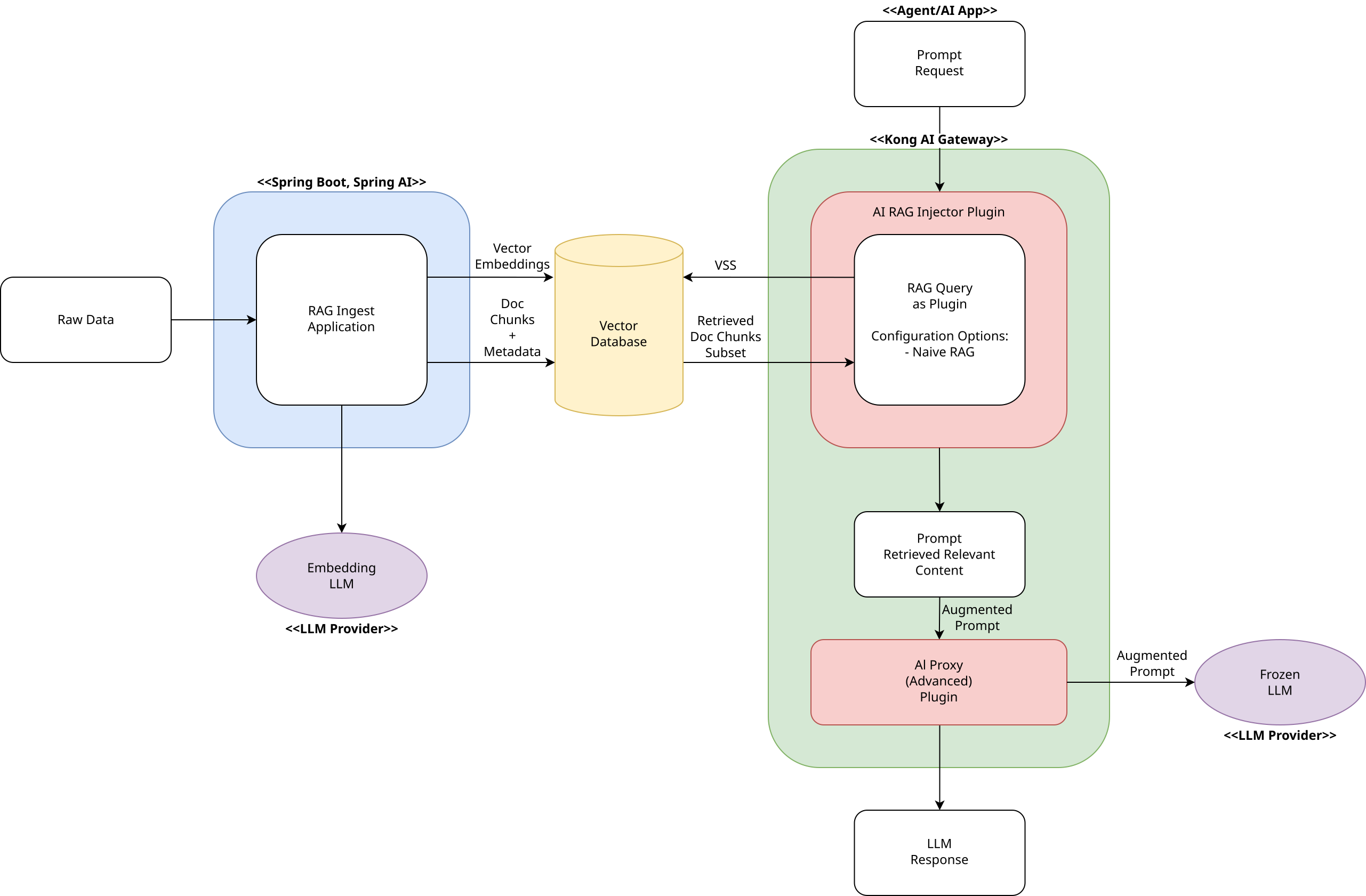

With the introduction of Kong’s RAG Injector plugin in version 3.10, teams gained the ability to handle RAG query processing at the gateway level while continuing to leverage Spring AI’s mature ingestion capabilities. This hybrid approach offers a later migration path if you are still running on an older Kong Gateway version. It preserves existing ingest investments while gaining gateway-level benefits for querying.

The primary challenge with this approach lies in configuring Spring AI so that the Kong RAG plugin can access the vector embeddings for the VSS. The Spring AI vector database configuration must match what Kong expects: the index name and key prefix, the embedding field name and dimension (which follows from the embedding model used), and the content field name. To achieve this, it is necessary to move away from Spring AI’s opinionated defaults and instead employ a manual configuration approach.
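One way to catch a misalignment early is to compare the settings of both sides before the first ingest. The following sketch is a hypothetical helper, not a Spring AI or Kong type. The record, its fields and all values are assumptions for illustration, and the real values must come from your own Spring AI and Kong plugin configuration.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical holder for the settings that the ingesting and the querying side must share.
record VectorIndexSettings(String indexName, String keyPrefix, String embeddingField,
                           int dimension, String contentField) {

    // Returns one message per differing setting; an empty list means both sides are aligned.
    List<String> mismatchesWith(VectorIndexSettings other) {
        List<String> problems = new ArrayList<>();
        compare(problems, "index name", indexName, other.indexName);
        compare(problems, "key prefix", keyPrefix, other.keyPrefix);
        compare(problems, "embedding field", embeddingField, other.embeddingField);
        compare(problems, "content field", contentField, other.contentField);
        if (dimension != other.dimension) {
            problems.add("dimension: " + dimension + " vs " + other.dimension);
        }
        return problems;
    }

    private static void compare(List<String> problems, String label, String mine, String theirs) {
        if (!mine.equals(theirs)) {
            problems.add(label + ": " + mine + " vs " + theirs);
        }
    }

    public static void main(String[] args) {
        // Illustrative values only; they are not defaults of Spring AI or Kong.
        VectorIndexSettings springSide =
                new VectorIndexSettings("docs-idx", "doc:", "embedding", 1536, "content");
        VectorIndexSettings kongSide =
                new VectorIndexSettings("docs-idx", "doc:", "vector", 768, "content");

        springSide.mismatchesWith(kongSide).forEach(System.out::println);
    }
}
```

A dimension mismatch usually means the two sides use different embedding models, in which case the similarity search cannot return meaningful results even if every name matches.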

Approach 3: Unified Kong version

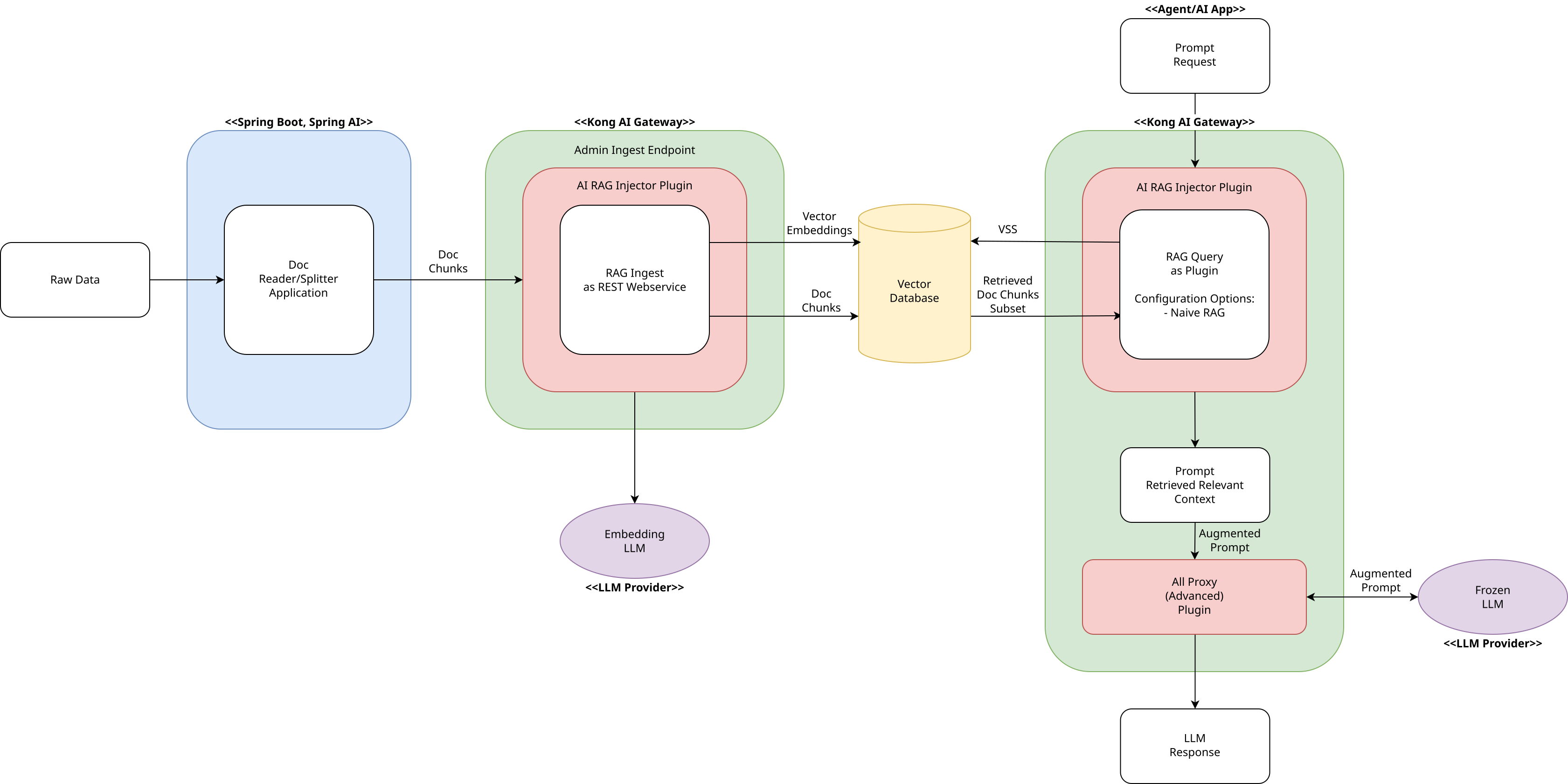

The final approach represents the most integrated solution, where Kong’s RAG Injector plugin handles both vector database ingestion and querying, while Spring AI only reads and chunks data. Before feeding data to the AI Gateway, you have to split your raw input data into manageable chunks. The Kong documentation mentions the langchain_text_splitters for this purpose; however, I advise using the various Spring AI document readers and splitters for greater flexibility. Once the data is split appropriately, you can send the chunks to the AI Gateway through the Kong Admin RAG Injector ingest API endpoint. This endpoint is automatically configured when you use the RAG Injector plugin. If required, it can also be routed through a standard Kong Gateway proxy, which avoids exposing the Kong admin token and allows other authentication and authorization methods to be used instead. This architecture minimizes external dependencies and maximizes operational efficiency.
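The split-then-send flow can be sketched in plain Java as follows. This is a simplified illustration under stated assumptions: the character-window splitter stands in for Spring AI's document readers and splitters, the endpoint URL is a placeholder, and the `{"content": "..."}` body shape is an assumption that should be checked against the Kong RAG Injector documentation. The requests are only built, not sent.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: split text into overlapping chunks and prepare one ingest request per chunk.
final class ChunkIngestSketch {

    // Splits text into windows of at most chunkSize characters;
    // consecutive windows share `overlap` characters.
    static List<String> split(String text, int chunkSize, int overlap) {
        if (chunkSize <= 0 || overlap < 0 || overlap >= chunkSize) {
            throw new IllegalArgumentException("need chunkSize > 0 and 0 <= overlap < chunkSize");
        }
        List<String> chunks = new ArrayList<>();
        int step = chunkSize - overlap;
        for (int start = 0; start < text.length(); start += step) {
            int end = Math.min(start + chunkSize, text.length());
            chunks.add(text.substring(start, end));
            if (end == text.length()) {
                break; // the last window reached the end of the text
            }
        }
        return chunks;
    }

    // Escapes a string so it can be embedded in a JSON string literal.
    static String jsonEscape(String value) {
        StringBuilder sb = new StringBuilder();
        for (char c : value.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> {
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
                }
            }
        }
        return sb.toString();
    }

    // ASSUMPTION: the body shape is illustrative; verify it against the Kong documentation.
    static String ingestBody(String chunk) {
        return "{\"content\":\"" + jsonEscape(chunk) + "\"}";
    }

    // Builds (but does not send) a POST request for one chunk.
    static HttpRequest buildIngestRequest(URI ingestEndpoint, String chunk) {
        return HttpRequest.newBuilder(ingestEndpoint)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(ingestBody(chunk)))
                .build();
    }

    public static void main(String[] args) {
        // Placeholder URL; replace it with the ingest endpoint of your RAG Injector plugin.
        URI endpoint = URI.create("http://localhost:8001/ingest-endpoint-placeholder");
        for (String chunk : split("abcdefghij", 4, 1)) {
            HttpRequest request = buildIngestRequest(endpoint, chunk);
            System.out.println(request.method() + " " + request.uri() + " " + ingestBody(chunk));
        }
    }
}
```

Overlapping windows are a simple way to keep a sentence that straddles a chunk boundary retrievable from either side. A structure-aware splitter that respects sentences or tokens would normally replace the character window used here.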

The diagram illustrates that only the document reading and splitting steps that precede ingestion are implemented with Spring AI. This setup hides the previously discussed complexities of configuring the vector database, simplifying the overall implementation. It covers only the naive RAG approach, yet offers simplicity and stability in return. Modular RAG capabilities are currently available only with the first approach.

Conclusion

As more and more companies use retrieval-augmented generation solutions, the challenge of seamlessly integrating these capabilities into existing systems becomes more important. Python frameworks like LangChain and LlamaIndex, as well as Spring AI from the Spring ecosystem, offer a more hands-on and laborious way to create AI solutions than the Kong AI Gateway. There is overlap in functionality, but Kong provides a simpler, faster, most likely more secure and more streamlined solution. However, particularly during the RAG ingestion phase, the capabilities of these frameworks can complement and enhance the overall process. In traditional business environments, Java is a primary programming language. This gives Spring AI a strategic advantage when implementing additions to the Kong AI Gateway, such as RAG components within the overall architecture. And as we have seen once again with the implementation of RAG, with Kong Gateway, you will not run into any limitations.

Credits

Title image by Holger Kleine on iStock