In our previous blog posts in the Kong Gateway series, we explored various security aspects, particularly focusing on token-related issues and their solutions involving both Kong and custom-built plugins. Today, we dive deeper into another way of adding code to the gateway: Kong serverless functions. Using a real-world “Who responded?” example, we demonstrate how a root cause analysis (RCA) can be supported effectively with minimal effort.

Overview

This technical blog post is about serverless functions on the Kong Gateway and how we can use them to complement its built-in functionality. In previous posts of this series, we discussed using Kong plugins to add functionality, the rationale for occasionally cloning or patching them, and programming custom plugins that let developers and administrators add missing functionality. We have also mentioned serverless functions before, in the Kong introduction blog and in the log chunking blog, although the focus there was on a supplementary overview and on logging aspects.

So what are these Kong serverless functions all about?

Serverless function plugins have been available since Kong version 0.14, released in 2018. The concise answer to what they are all about can be found in the original announcement: “(serverless functions, A.N.) dynamically run Lua code without having to write a full-fledged (custom, A.N.) plugin. Lua code snippets can be uploaded via the Admin API and be executed during Kong’s access phase.”

In short, if the required code is not extensive enough to justify a full-fledged plugin, you can opt for a serverless function that adds functionality with just a few lines of code. Kong refers to these as code snippets; in this context, they could also be called code nuggets: small, often reusable, self-contained functions that perform a specific, well-defined task that Kong does not provide out of the box.

Subsequently, support for serverless functions was extended to the declarative DB-less mode, and they can be configured in the Kong Manager UI in addition to uploading Lua code snippets via the Admin API. Later in this post, we will also show how a serverless function can be described as a KongPlugin custom resource (CRD) in a Kubernetes manifest. Furthermore, since version 2.1.0, serverless functions are no longer limited to the access phase and can run in all NGINX phases that Kong exposes to plugins, such as rewrite, header_filter, body_filter, and log.
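For illustration, here is a minimal sketch of a DB-less declarative configuration (kong.yml) that attaches a post-function to a service with code in two different phases. The service, route, and upstream URL are hypothetical placeholders, and `_format_version: "3.0"` assumes a Kong Gateway 3.x installation.

```yaml
# kong.yml (DB-less, declarative); all names and the URL are placeholders
_format_version: "3.0"
services:
  - name: example-service
    url: http://example-upstream:8080
    routes:
      - name: example-route
        paths:
          - /example
    plugins:
      - name: post-function
        config:
          # runs after all other plugins in the header_filter phase
          header_filter:
            - |
              return function()
                kong.response.set_header('X-GW-Response-Source',
                                         kong.response.get_source())
              end
          # the same plugin instance can also hook into the log phase
          log:
            - |
              return function()
                kong.log.notice('response source: ', kong.response.get_source())
              end
```

Each phase key takes a list of Lua snippets; the `return function() ... end` form compiles the chunk once and then calls the returned function on every request.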

Kong serverless functions come in two flavors, pre-functions and post-functions, and the names refer to their position in the plugin execution order: within each phase, pre-functions run before all other plugins (highest priority, 1000000), while post-functions run after all other plugins (lowest priority, -1000). In practice, pre-functions are typically used on the request path, before the request reaches the upstream service, to validate, modify, or enrich incoming requests, for example for authentication, logging, or data transformation. Post-functions are typically used after the upstream service has processed the request but before the response is sent back to the client, to manipulate the response, log output, or handle errors. The primary difference is thus their timing and focus: pre-functions prepare and control requests, while post-functions deal with responses and post-processing tasks.
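As a sketch of the validation use case, the following global pre-function rejects requests in the access phase before any other plugin runs. The X-Api-Version header and the error message are hypothetical examples chosen for illustration, not a Kong convention.

```yaml
_format_version: "3.0"
plugins:
  # global pre-function: runs before all other plugins in the access phase
  - name: pre-function
    config:
      access:
        - |
          -- hypothetical rule: requests must carry an X-Api-Version header
          return function()
            if not kong.request.get_header('X-Api-Version') then
              -- short-circuit: the upstream service is never contacted
              return kong.response.exit(400,
                { message = 'missing X-Api-Version header' })
            end
          end
```

Because kong.response.exit() is used here, a response produced by this rule would later be reported as source “exit” by kong.response.get_source().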

The advantage of pre- and post-functions is easier and faster code deployment without boilerplate code, which lets teams focus on pure functionality rather than on more complex custom development. However, it is more difficult to introduce configuration options (see also knowledge article number 2215), and outside the access phase their execution priority is fixed. As of version 3.0.0, Kong Gateway supports dynamic plugin ordering, even for serverless function plugins, during the access phase: you can override a plugin’s static priority by specifying the order in which plugins are executed. Note that starting with version 3.5.0, dynamic plugin ordering requires an Enterprise license, which may make custom plugins the better choice again. Finally, extensive processing in pre- or post-functions can impact performance, so it is essential to keep the logic lightweight.
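A minimal sketch of dynamic plugin ordering in declarative form, assuming Kong Gateway 3.x (with an Enterprise license from 3.5.0 on): the `ordering` field moves the access code of a post-function in front of key-auth instead of leaving it at its static priority. The choice of key-auth and the log message are illustrative assumptions.

```yaml
_format_version: "3.0"
plugins:
  - name: key-auth
  - name: post-function
    # dynamic plugin ordering: only the access phase can be reordered;
    # without this block, the post-function would run after key-auth
    ordering:
      before:
        access:
          - key-auth
    config:
      access:
        - |
          return function()
            kong.log.notice('post-function access code ran before key-auth')
          end
```

In all other phases, the post-function keeps its static priority of -1000.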

As of version 2.2.1, serverless functions are executed in a protected sandbox. Kong added the sandbox to enhance security and isolation when executing arbitrary custom code: it prevents pre- and post-functions from accessing sensitive gateway data and system resources, which reduces the risk of vulnerabilities and ensures that serverless functions operate safely within the Kong Gateway. This gives users the flexibility to run custom code while maintaining a secure and stable API environment, with more reliable and controlled execution of serverless functions.
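The sandbox behavior is controlled by the `untrusted_lua` setting in kong.conf. Assuming a Helm-based installation with the kong/kong chart, where entries under `env` are passed to Kong as KONG_* environment variables, a sketch of the relevant values could look like this:

```yaml
# values.yaml for the kong/kong Helm chart (assumption: Helm-based install);
# this entry becomes the environment variable KONG_UNTRUSTED_LUA
env:
  # sandbox (default): serverless code runs in the restrictive sandbox
  # on:      serverless code runs without sandbox restrictions
  # off:     loading Lua code from admin-supplied sources is not allowed
  untrusted_lua: sandbox
```

Keeping the default `sandbox` value is the safer choice; `on` should only be considered when a snippet genuinely needs resources the sandbox blocks.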

Practical function example, “Who responded?”

The Q in Q&A

It’s time to showcase a serverless function that provides real value in the day-to-day work of an API administrator or operator. Since the gateway operates in front of the service layer, most error inquiries are first directed to the API gateway staff, and API clients reach out with a variety of questions to diagnose and resolve their issues.

Any root cause analysis (RCA) involves collecting and efficiently searching through log data to better understand a problem. This process includes gathering additional information, such as crash logs and application, server, and gateway logs (ideally supported by a log management solution), to establish evidence of the issue, its duration, and its frequency.

However, the most pressing question is whether the error originated from the API gateway or any upstream service in the backend call chain, and who needs to be contacted. This information is crucial to help consumers determine the appropriate next steps for resolution and avoid delays in the problem resolution process.

How about providing API consumers with an indication of whether an HTTP error code was generated by the gateway itself or originated from an upstream service? The question to be answered is: “Who responded, the API gateway or an upstream service?”

The A in Q&A

This question can be answered by a serverless function with just a few lines of code, using the PDK function kong.response.get_source(). Once it is deployed, API staff are spared unnecessary inquiries about responses that came from an upstream service or from a deliberate exit by the API gateway.

Here is the code example: a post-function for a Kong Ingress Controller installation, written as a Kubernetes manifest. The same serverless code can also be configured via the Kong Manager UI, an Admin API call, or declaratively.

```yaml
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: service-post-function
  annotations:
    kubernetes.io/ingress.class: kong
    konghq.com/tags: service-post-function
plugin: post-function
config:
  header_filter:
    - |
      return function()
        -- fallback if no response source can be determined
        local res_source = 'unknown'
        local source = kong.response.get_source()
        if source then
          res_source = source
          -- a 200 answer to an OPTIONS (CORS preflight) request is a deliberate exit
          if res_source == 'exit'
              and kong.request.get_method() == 'OPTIONS'
              and kong.response.get_status() == 200 then
            res_source = 'intentional-exit'
          end
        end
        -- write the source to the Kong log and return it to the caller
        kong.log.notice('X-GW-Response-Source: ', res_source)
        kong.response.set_header('X-GW-Response-Source', res_source)
      end
```
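With the Kong Ingress Controller, a KongPlugin resource on its own is not applied to any traffic; it takes effect once a Kubernetes Service (or Ingress) references it via the konghq.com/plugins annotation. A minimal sketch, where the Service name, selector label, and ports are hypothetical placeholders:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: example-service          # hypothetical Service name
  annotations:
    # comma-separated list of KongPlugin names from the same namespace
    konghq.com/plugins: service-post-function
spec:
  selector:
    app: example-app             # hypothetical Pod label
  ports:
    - port: 80
      targetPort: 8080
```

Attaching the plugin at the Service level means every route proxied to this Service reports its response source.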

The variable res_source is initialized with the value ‘unknown’ and, under normal circumstances, overwritten with the return value of kong.response.get_source(). This function returns one of three possible strings:

- The term “exit” is returned if a call to kong.response.exit() was made at any point during request processing. This happens when the request is short-circuited by a plugin or by Kong itself, such as in the case of invalid credentials. Here, the upstream service was not accessed.

- The term “error” is returned when an error occurs during the processing of the request, such as a timeout while connecting to the upstream service. It indicates that an unexpected and unrecoverable error occurred during request processing.

- The term “service” is returned when the response is generated by successfully contacting the proxied upstream service, regardless of the HTTP response code returned by the service.

In 2022, I discovered a product bug: crashes in serverless functions and plugins were not reported as “error”. It was fixed in Enterprise version 2.8.1.3 (interested Kongers can read the details in support case SC00025886).

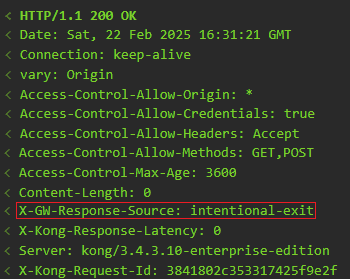

As you can see, I have further refined the exit response with the help of the get_method() and get_status() functions. There are certainly cases where an exit is intentional and not a problem. One scenario is the CORS plugin, which causes an exit for OPTIONS preflight requests even though a 200 status code is returned. This is an intentional exit that should be reflected back to the caller accordingly.
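To reproduce the CORS scenario, a sketch of a service with the CORS plugin could look as follows. The origin, service, route, and URL are hypothetical; `preflight_continue: false` is the plugin’s default, shown explicitly because it is what makes Kong answer preflight requests itself instead of proxying them.

```yaml
_format_version: "3.0"
services:
  - name: example-service               # hypothetical
    url: http://example-upstream:8080   # hypothetical
    routes:
      - name: example-route
        paths:
          - /example
    plugins:
      - name: cors
        config:
          origins:
            - https://app.example.com   # hypothetical front-end origin
          methods:
            - GET
            - POST
            - OPTIONS
          # false (default): Kong answers preflight requests itself, so the
          # post-function sees source 'exit' together with status 200
          preflight_continue: false
```

With the post-function from the manifest also attached, such preflight responses would carry X-GW-Response-Source: intentional-exit.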

X-GW-Response-Source.

The response source is written to the logs and also returned to the consumer in the X-GW-Response-Source response header. All that remains is to inform API consumers that they should take the X-GW-Response-Source value into account and, if necessary, contact the service owner directly. The API gateway team only needs to be contacted if the value is “exit” or “error”.
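kong.log.notice() writes to the NGINX error log. If the response source should also appear in the JSON entries produced by logging plugins such as http-log or file-log, one option is kong.log.set_serialize_value(). The sketch below is a simplified variant of the manifest (without the intentional-exit refinement); the plugin name and the log field name response_source are hypothetical. The value is set in header_filter on purpose: post-functions run last within each phase, so setting it in the log phase would come too late for those plugins.

```yaml
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: service-post-function-logged   # hypothetical name for this variant
  annotations:
    kubernetes.io/ingress.class: kong
plugin: post-function
config:
  header_filter:
    - |
      return function()
        local res_source = kong.response.get_source() or 'unknown'
        kong.response.set_header('X-GW-Response-Source', res_source)
        -- header_filter runs before the log phase, so logging plugins
        -- include this field when they serialize the request
        kong.log.set_serialize_value('response_source', res_source)
      end
```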

Conclusion

Kong serverless functions are another excellent example of the many ways to extend Kong’s functionality as needed. We explored the capabilities of serverless functions on the Kong Gateway and how they extend the gateway without the overhead of writing full-blown custom plugins. By allowing gateway staff to execute simple Lua code snippets, serverless functions enable quick and efficient deployment of custom functionality. We demonstrated a practical use case, a “Who responded?” post-function executed in the header_filter phase, which helps API administrators diagnose incidents by indicating whether a response originated from an upstream service or from the API gateway itself. And as serverless functions show once again, Kong Gateway offers a way around most functional limitations.

Credits

Title image by guvendemir on iStock