In our previous blog post, we discussed token validation in the context of the Zero-Trust Architecture (ZTA) principle ‘never trust, always verify’. This time, I would like to introduce an architecture pattern for token handling that is particularly effective in environments that use multiple identity and access management (IAM) products. With this pattern, the API gateway acts as a benign token cloner: it repairs claim defects and leads service providers to believe that it is a token-issuing authorization server. This pattern has several advantages, which I will explain in detail.

Overview

This blog post is about token cloning on Kong Gateway (see our introductory article here), an approach that is also conceivable on other API gateways. More precisely, it is an architectural gateway pattern that could be referred to as ‘therapeutic token cloning’. The term is an apt metaphor for copying access tokens, making the necessary corrections, and then re-signing them. It borrows from the advanced concept of therapeutic cloning in biomedicine and genetic engineering, where clones are created to correct genetic defects. Similarly, therapeutic token cloning at the gateway duplicates, corrects, and then re-signs access tokens to make them functional and (more) secure. Fortunately, we can leave the ethical and legal debates about cloning aside.

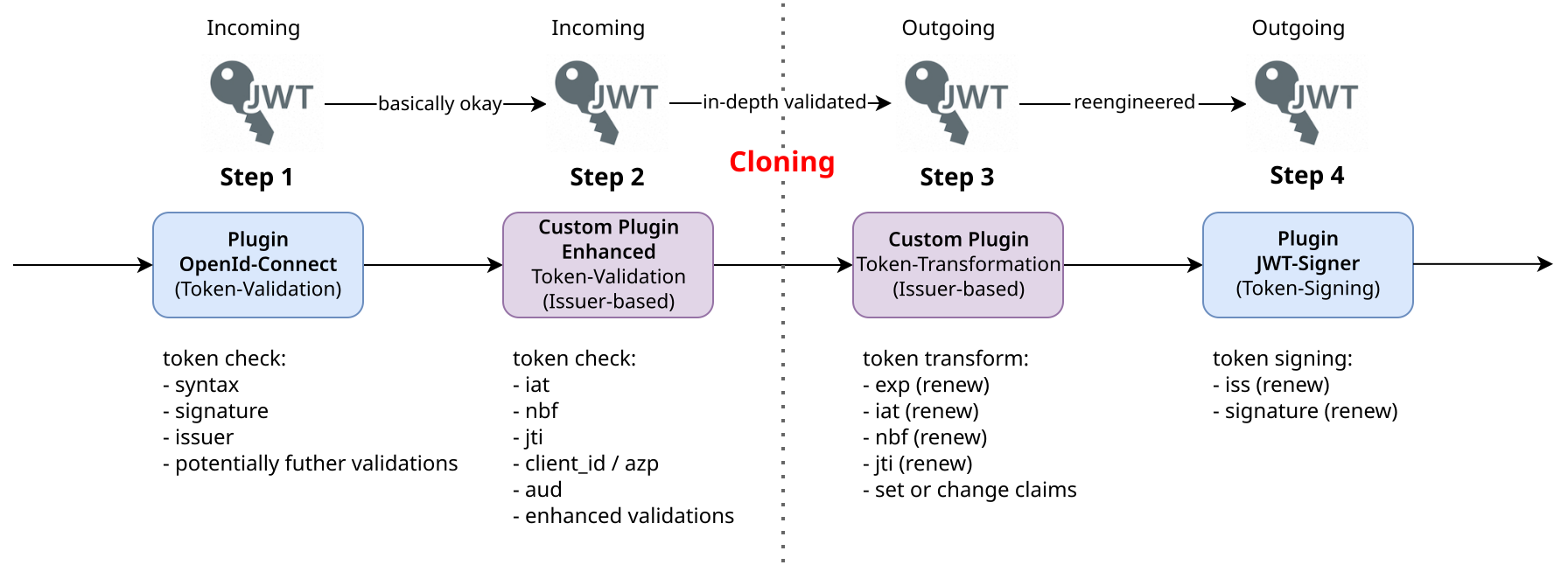

Step 1: At the beginning of the gateway processing pipeline, the incoming access token is validated. The insights from our previous blog post on fast token validation and the Kong plugins described there help us identify an optimal validation solution. The Kong Enterprise openid-connect plugin offers good validation options: it can validate the issuer (parameter issuers_allowed), the expiration time claim (exp), and the token signature. As described in the previous post, in addition to these basic checks, the plugin offers further options for validating registered, public, and even private claims. It can also use the JSON Web Key Set (JWKS) endpoints of several identity providers (IdPs) whose public keys are trusted (parameter extra_jwks_uris). This is especially helpful in environments where access tokens originate from different IdPs.
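To make the basic checks concrete, here is a minimal Python sketch of an issuer allowlist and time-claim check on a payload whose signature has already been verified. It only illustrates the idea behind issuers_allowed and the exp check and is not the openid-connect plugin's code. The function name, the issuer URLs and the 60-second leeway are assumptions for illustration.

```python
import time
from typing import Iterable, Optional


class TokenValidationError(Exception):
    """Raised when a decoded access token fails a basic check."""


def validate_basic_claims(
    payload: dict,
    issuers_allowed: Iterable[str],
    now: Optional[float] = None,
    leeway: int = 60,  # assumed clock-skew tolerance in seconds
) -> None:
    """Check issuer and time claims of an already signature-verified payload."""
    now = time.time() if now is None else now

    # Only tokens from explicitly trusted issuers are accepted.
    iss = payload.get("iss")
    if iss not in set(issuers_allowed):
        raise TokenValidationError(f"issuer not allowed: {iss!r}")

    # exp is mandatory and must not lie in the past (minus leeway).
    exp = payload.get("exp")
    if not isinstance(exp, (int, float)):
        raise TokenValidationError("missing or non-numeric exp claim")
    if now > exp + leeway:
        raise TokenValidationError("token expired")

    # nbf is optional, but if present the token must already be valid.
    nbf = payload.get("nbf")
    if nbf is not None and now + leeway < nbf:
        raise TokenValidationError("token not yet valid")
```

Signature verification is deliberately left out here, since in the described setup it is handled by the openid-connect plugin together with key retrieval and caching.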

Step 2: In the past, Kong’s built-in options were not sufficient for my extensive token validation requirements, especially for private claims, so I turned to custom plugins for advanced validation of incoming tokens. It is important to note that the plugins work together: the openid-connect plugin performs the basic validations, while a subsequent custom token validation plugin checks the individual claims in detail, depending on the issuer and its characteristics. Using the openid-connect plugin makes sense above all because of its built-in signature verification, including (proxy-aware) retrieval and caching of public keys following a “rediscover keys when needed” strategy.
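The issuer-dependent part of this division of labor can be pictured as a lookup from issuer to a list of claim rules. The following Python sketch is only an illustration of that dispatch idea, not the author's Lua plugin. The issuer URLs, the required claims and the orders.read scope are hypothetical examples.

```python
from typing import Callable, Dict, List

# A rule inspects the payload and returns a list of problems (empty = OK).
Validator = Callable[[dict], List[str]]


def require_claims(*names: str) -> Validator:
    def check(payload: dict) -> List[str]:
        return [f"missing claim: {n}" for n in names if n not in payload]
    return check


def require_scope(scope: str) -> Validator:
    def check(payload: dict) -> List[str]:
        # RFC 9068 uses a space-delimited string for the scope claim.
        scopes = payload.get("scope", "").split()
        return [] if scope in scopes else [f"missing scope: {scope}"]
    return check


# Hypothetical per-issuer rule sets; each IdP gets its own checks.
ISSUER_RULES: Dict[str, List[Validator]] = {
    "https://idp-a.example.com": [require_claims("sub", "client_id"), require_scope("orders.read")],
    "https://idp-b.example.com": [require_claims("sub", "tenant")],
}


def validate_issuer_specific(payload: dict) -> List[str]:
    """Run the rules registered for the token's issuer (after basic validation)."""
    rules = ISSUER_RULES.get(payload.get("iss"))
    if rules is None:
        return [f"no rules for issuer: {payload.get('iss')!r}"]
    problems: List[str] = []
    for rule in rules:
        problems.extend(rule(payload))
    return problems
```

Returning all problems instead of stopping at the first one is a design choice that makes rejected tokens easier to diagnose in logs.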

As mentioned in our previous blog post, I recommend following RFC 9068 (JSON Web Token (JWT) Profile for OAuth 2.0 Access Tokens) and performing at least the validations it requires. That post also describes the phantom token pattern, which can be combined with the cloning pattern.
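For orientation, the following Python sketch lists the RFC 9068 checks on an already decoded header and payload: the typ header, rejection of alg "none", the claims that the profile marks as required, an exact issuer match, the expected audience and the expiry. It is a simplified illustration under the assumption that signature verification (and decryption of encrypted tokens) happens elsewhere, and the function name is hypothetical.

```python
import time
from typing import List, Optional

# Claims that RFC 9068 marks as REQUIRED in a JWT access token.
REQUIRED_CLAIMS = ("iss", "exp", "aud", "sub", "client_id", "iat", "jti")


def rfc9068_checks(header: dict, payload: dict, expected_issuer: str,
                   expected_audience: str, now: Optional[float] = None) -> List[str]:
    """Return a list of RFC 9068 violations (signature check not included)."""
    now = time.time() if now is None else now
    problems: List[str] = []

    # The typ header must identify a JWT access token.
    typ = str(header.get("typ", "")).lower()
    if typ not in ("at+jwt", "application/at+jwt"):
        problems.append(f"unexpected typ: {header.get('typ')!r}")

    # Unsigned tokens are never acceptable; a missing alg is treated as "none".
    if str(header.get("alg", "none")).lower() == "none":
        problems.append("alg 'none' is not acceptable")

    problems += [f"missing claim: {c}" for c in REQUIRED_CLAIMS if c not in payload]

    # The issuer must match exactly.
    if payload.get("iss") != expected_issuer:
        problems.append("issuer mismatch")

    # aud may be a single string or a list of strings.
    aud = payload.get("aud")
    audiences = [aud] if isinstance(aud, str) else (aud if isinstance(aud, list) else [])
    if expected_audience not in audiences:
        problems.append("audience mismatch")

    exp = payload.get("exp")
    if not isinstance(exp, (int, float)) or now >= exp:
        problems.append("token expired or exp invalid")
    return problems
```

Tokens from some IdPs may not carry all of these properties out of the box. That is one reason why the issuer-specific custom plugin and the later cloning step are useful.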

Step 3: Once the input token has been validated (not malformed, not tampered with, not expired, plus any extended checks), a clone of it is created. All existing claims are transferred to the new access token. Since it is a new token, at least the timestamp claims (exp, iat, nbf) and the unique identifier claim (jti) are renewed in a custom plugin. This dedicated custom plugin duplicates the input access token and then applies a set of predefined transformations or corrections to fix any issues or inconsistencies. Two separate custom plugins, one for validation and one for transformation, work well in practice because they can be combined like building blocks or used individually. For example, I had a requirement that the input token be checked in full detail by the validating custom plugin, after which a silent authorization via token exchange had to take place at the gateway using a client credentials grant flow. In this case, I skipped the token cloning and re-signing step.
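The core of the cloning step can be sketched in a few lines of Python: copy every claim, then renew iat, nbf, exp and jti. This is not the author's Lua plugin. The 300-second lifetime and the rule that the clone never outlives the original token (exp capped at the original exp) are assumptions for illustration.

```python
import copy
import time
import uuid
from typing import Optional


def clone_claims(original: dict, lifetime: int = 300, now: Optional[int] = None) -> dict:
    """Return a new claim set copied from `original` with fresh time and ID claims.

    Assumption for this sketch: the clone never outlives the original token,
    so its exp is capped at the original exp.
    """
    now = int(time.time()) if now is None else now
    clone = copy.deepcopy(original)  # carry over all existing claims, including nested ones
    clone["iat"] = now
    clone["nbf"] = now
    clone["exp"] = min(now + lifetime, int(original["exp"]))
    clone["jti"] = str(uuid.uuid4())  # a new token gets a new unique identifier
    return clone
```

The deep copy keeps the original claim set untouched, so later "therapeutic" corrections on the clone cannot leak back into the validated input token.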

All “therapeutic measures” (to stay with the metaphor) can generally be applied to the cloned token via the custom plugin. For example, in one use case it was necessary to set the subject claim (sub) for certain input tokens from an aggregated custom claim within a nested structure. In another example, Microsoft Entra ID provided client IDs in the audience claim, and these had to be resolved to unique recipient names. This ensured that each principal processing the JWT could identify itself by a string value in the audience claim, as is common with other IdPs.
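The two corrections described above can be sketched in Python as follows. The nested claim path ext.identity.employeeId, the GUID and the recipient name orders-service are hypothetical placeholders, and the lookup table stands in for whatever name resolution the real plugin uses.

```python
from typing import Dict, Optional, Sequence


def get_nested(claims: dict, path: Sequence[str]) -> Optional[object]:
    """Follow a key path into nested dicts; return None if any step is missing."""
    node: object = claims
    for key in path:
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def set_sub_from_nested(claims: dict, path: Sequence[str]) -> dict:
    """Overwrite sub with a value taken from a nested custom claim, if present."""
    value = get_nested(claims, path)
    if value is not None:
        claims["sub"] = str(value)
    return claims


def resolve_audience(claims: dict, client_id_names: Dict[str, str]) -> dict:
    """Replace client IDs in aud with recipient names; unknown values stay as-is."""
    aud = claims.get("aud")
    if aud is None:
        return claims
    auds = [aud] if isinstance(aud, str) else list(aud)
    resolved = [client_id_names.get(a, a) for a in auds]
    # Preserve the original shape: single string stays a string, list stays a list.
    claims["aud"] = resolved[0] if isinstance(aud, str) else resolved
    return claims
```

Leaving unknown client IDs unchanged is one possible choice. A stricter variant would reject the token instead, so that an unresolved audience never reaches a backend service.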

Step 4: After processing, the new token is passed to the Kong Enterprise jwt-signer plugin for re-signing. The plugin performs the re-signing and also provides an endpoint from which upstream services can retrieve the public key needed for signature verification. It also updates the token header information for the new signature and sets the gateway as the issuer in the token payload.
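Conceptually, re-signing means building a new JWS compact serialization with the gateway as issuer and a header that references the gateway's key. The Python sketch below shows this with HS256 only because HMAC is available in the standard library. The jwt-signer plugin publishes a public key, so in the described setup an asymmetric algorithm (for example RS256) is used instead. The issuer URL, the kid and the typ value are assumptions for illustration.

```python
import base64
import hashlib
import hmac
import json


def b64url(data: bytes) -> str:
    """Base64url encoding without padding, as used in JWS."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def resign(claims: dict, gateway_issuer: str, kid: str, key: bytes) -> str:
    """Serialize `claims` as a compact JWS issued by the gateway.

    HS256 is a stdlib stand-in for this sketch; a gateway that publishes
    a JWKS for upstream verification would sign asymmetrically.
    """
    payload = dict(claims, iss=gateway_issuer)  # the gateway becomes the issuer
    header = {"alg": "HS256", "typ": "at+jwt", "kid": kid}  # header for the new signature
    signing_input = ".".join(
        b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    signature = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{b64url(signature)}"
```

The kid in the header is what allows upstream services to pick the matching key from the gateway's JWKS endpoint.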

Here is an architectural diagram showing the described gateway pattern.

The diagram shows the strict separation between the incoming and outgoing token processing using the token cloning approach. All claims can be retained, supplemented, or modified.

Details

As mentioned earlier, combining standard and custom plugins is beneficial for processing incoming tokens and generating outgoing tokens. Dividing the work between pairs of plugins improves efficiency and reduces the amount of programming needed in the custom plugins. During the fine-tuning phase, I developed issuer-specific custom plugins for the enhanced validation and the cloning/transformation steps, with a set of shared Lua modules covering the common base functionality. This kept the custom plugins compact and versatile. I assigned these plugins to the service entities according to the issuer of the incoming token, so that each token receives the appropriate processing.

Configuring the jwt-signer plugin can be somewhat complex, so here are some details about its crucial parameters. The token to be signed is passed to the jwt-signer plugin in a temporary request header. Fortunately, the plugin lets you configure the name of the header that contains the access token to be signed (access_token_request_header parameter). I deliberately avoided the standard “Authorization” header for this purpose to prevent any conflict or confusion with the incoming access token from the consumer. If, for some reason, no token clone has been created by the preceding custom plugin (step 3 in the diagram), the jwt-signer plugin intentionally fails because no token header has been passed. Processing of the access token is mandatory and cannot be bypassed (access_token_optional: false).

The static private key that the jwt-signer plugin uses to sign tokens comes from an NGINX server block that provides a secure local endpoint for the gateway plugin (this endpoint is only reachable within the Kong pod and is not exposed to the outside world). This local endpoint, which serves the private key in JWKS format, is created during gateway startup and assigned to the access_token_keyset parameter. Additionally, the gateway's issuer URL, which the plugin writes into the iss claim of the token, must be configured (parameter access_token_issuer). I configured a more readable URL (using the Kong Enterprise request-transformer-advanced plugin) on a loopback proxy endpoint (configured on the local admin endpoint) that points to the gateway's jwt-signer key endpoint and returns a standard JWKS response.

As with the token input, a dedicated header can be configured for the token output. To ensure seamless integration with backend services, the finalized and signed access token is placed back into the standard Authorization header (access_token_upstream_header: Authorization: bearer).
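The header handoff between the cloning plugin and the jwt-signer plugin can be simulated with a plain dictionary of request headers. The Python sketch below only models the behavior described above (temporary input header, hard failure when it is missing, signed token written to Authorization). It is not Kong code. The header name X-Cloned-Token and the function names are hypothetical, and real HTTP header names are case-insensitive, which this sketch ignores.

```python
from typing import Callable, Dict

TEMP_HEADER = "X-Cloned-Token"  # hypothetical value for access_token_request_header


class MissingTokenError(Exception):
    """Models the intentional failure when no clone was handed over."""


def clone_step(headers: Dict[str, str], cloned_token: str) -> None:
    """Step 3: hand the unsigned clone over in a temporary request header."""
    headers[TEMP_HEADER] = cloned_token


def signer_step(headers: Dict[str, str], sign: Callable[[str], str],
                token_optional: bool = False) -> None:
    """Step 4: read the temporary header, sign, and overwrite Authorization."""
    token = headers.pop(TEMP_HEADER, None)  # the temporary header is not forwarded
    if token is None:
        if token_optional:
            return
        # Mirrors access_token_optional: false, no clone means no request upstream.
        raise MissingTokenError("no cloned token passed; refusing to proxy")
    headers["Authorization"] = f"Bearer {sign(token)}"
```

Because the clone travels in its own header, the consumer's original Authorization value can never be mistaken for the token to be signed.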

My perspective on the Pros and Cons

One of the major advantages of this pattern is that it relieves upstream services of the need to manage key procurement and caching for multiple, potentially external, IdPs. Instead, upstream services trust the API gateway and obtain the public key exclusively from the (internal) gateway. From the services’ point of view, the gateway acts as a single trusted IdP. This approach increases stability and security while simplifying the security configuration for service providers.
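On the upstream side, trusting only the gateway reduces key selection to a single issuer and a single JWKS. The following Python sketch shows that selection step on a decoded header and payload. The issuer URL, the kid and the JWKS content are placeholders, and the actual signature check with the selected key is left to a JOSE library.

```python
from typing import Dict, List


class UntrustedTokenError(Exception):
    """Raised when a token does not come from the gateway's own keys."""


def select_gateway_key(header: dict, payload: dict, gateway_issuer: str,
                       gateway_jwks: Dict[str, List[dict]]) -> dict:
    """Pick the verification key from the gateway JWKS, or reject the token."""
    # The only issuer an upstream service accepts is the gateway itself.
    if payload.get("iss") != gateway_issuer:
        raise UntrustedTokenError("token was not issued by the gateway")
    kid = header.get("kid")
    for jwk in gateway_jwks.get("keys", []):
        if jwk.get("kid") == kid:
            return jwk  # verify the signature with this key next
    raise UntrustedTokenError(f"unknown key id: {kid!r}")
```

Compared with trusting several external IdPs, the service needs just one issuer value and one key endpoint in its configuration.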

Another major advantage is the ability to customize or extend the incoming access token intended for the services without restriction (always remember: “with great power comes great responsibility”). This has proven very effective in practice when tokens from different IdPs, each with distinct claims, arrive at the gateway, are processed consistently, and are forwarded to the backend services as uniformly structured access tokens. A consistent token format reduces complexity and simplifies maintenance.
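One way to picture this harmonization is a per-issuer mapping from canonical claim names to the location of the corresponding value in each IdP's token. The Python sketch below keeps all existing claims and adds the canonical ones. The issuer URLs, the canonical names user_name and roles, and the source paths are hypothetical examples, not a statement about any specific IdP's token format.

```python
from typing import Dict, Optional, Sequence

# Hypothetical mappings: canonical claim name -> path in the issuer's token.
CLAIM_MAPS: Dict[str, Dict[str, Sequence[str]]] = {
    "https://idp-a.example.com": {"user_name": ["preferred_username"], "roles": ["roles"]},
    "https://idp-b.example.com": {"user_name": ["upn"], "roles": ["realm_access", "roles"]},
}


def lookup(claims: dict, path: Sequence[str]) -> Optional[object]:
    """Follow a key path into nested dicts; return None if any step is missing."""
    node: object = claims
    for key in path:
        if not isinstance(node, dict) or key not in node:
            return None
        node = node[key]
    return node


def harmonize(claims: dict) -> dict:
    """Add canonical claims so every upstream token has the same structure."""
    uniform = dict(claims)  # keep existing claims; only supplement them
    for canonical, path in CLAIM_MAPS.get(claims.get("iss"), {}).items():
        value = lookup(claims, path)
        if value is not None:
            uniform[canonical] = value
    uniform.setdefault("roles", [])  # services can rely on roles always being present
    return uniform
```

With such a table, supporting an additional IdP mostly means adding one mapping entry rather than changing code in every backend service.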

With this pattern, sensitive or detailed information can be kept within the system while only the necessary data is shared in external tokens. This helps protect privacy by ensuring that only essential information is exposed to external services, or at least that highly security-relevant information not required by the services is deliberately omitted, which reduces both the risk of data leakage and the attack surface. Although this aspect is not the primary focus of the pattern, it is a beneficial side effect. In addition, external tokens with minimal information are simpler and easier to manage, reducing overhead and complexity.
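Data minimization on the cloned token can be as simple as an allowlist of claims that are forwarded. The Python sketch below assumes a hypothetical set of claims that the upstream services need. An allowlist is used rather than a denylist so that claims newly introduced by an IdP are dropped by default instead of leaking through.

```python
from typing import Iterable

# Hypothetical allowlist of claims the upstream services actually need.
FORWARDED_CLAIMS = frozenset({"iss", "sub", "aud", "exp", "iat", "nbf", "jti", "scope", "roles"})


def minimize(claims: dict, allowed: Iterable[str] = FORWARDED_CLAIMS) -> dict:
    """Keep only allowlisted claims; everything else stays inside the gateway."""
    allowed_set = set(allowed)
    return {k: v for k, v in claims.items() if k in allowed_set}
```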

A disadvantage is the custom plugin code that needs to be written and adapted for each token issuer. However, with some expertise in Lua programming, this is manageable. Another factor to consider is the added latency compared to token pass-through, although in my experience the custom plugins typically execute within a few milliseconds.

Is therapeutic token cloning compatible with Zero-Trust Architecture (ZTA)? I believe it is, as the gateway is subject to the same rigorous security controls, continuous verification, and dynamic trust assessments as any other component within a ZTA. Importantly, the gateway must validate the original token’s integrity and the identity of the requester, which the pattern as described ensures. To maintain zero-trust conformance, any modifications to claims, particularly authorization claims, must adhere to the principle of least privilege. When an API gateway clones a token and re-signs it with its own key, it essentially reissues the token under its own authority. This underscores the API gateway’s role in a ZTA: enforcing security policies, authenticating and authorizing requests, and managing access to resources.
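One concrete guard for the least-privilege requirement is to verify that the clone never carries scopes that the original token did not have. The Python sketch below checks only the scope claim (as a space-delimited string or a list). The function names are hypothetical, and a real plugin would apply the same idea to other authorization claims such as roles.

```python
from typing import Set


def scopes_of(claims: dict) -> Set[str]:
    """Read scope as a space-delimited string (RFC 9068 style) or as a list."""
    scope = claims.get("scope", "")
    return set(scope.split()) if isinstance(scope, str) else set(scope)


def assert_no_privilege_escalation(original: dict, clone: dict) -> None:
    """Reject a clone whose scopes are not a subset of the original's scopes."""
    added = scopes_of(clone) - scopes_of(original)
    if added:
        raise PermissionError(f"clone adds scopes not in original token: {sorted(added)}")
```

Running such a check after all transformations and before re-signing makes the least-privilege rule enforceable rather than a convention.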

Conclusion

Token processing is complex, but in my opinion it plays an important, if not central, role in a Zero-Trust Architecture. The gateway, acting as a gatekeeper, decides which “guests” are allowed to enter and which are not. Mikey Cohen, former head of the Netflix Cloud Gateway team, once used a fitting metaphor in a talk: the gateway is a “bouncer at the club”. In addition to other checks, such as client certificates with mTLS or API keys, access tokens are now essential for mature security. Different token processing patterns are possible: token pass-through, phantom tokens to exchange opaque tokens for JWTs, silent token acquisition or renewal (see also my blog posts on the on-behalf-of token exchange and the SAML 2.0 bearer assertion flow for OAuth 2.0), or the “therapeutic” token cloning presented here. Architectural trade-offs are required, but this pattern has worked well in practice for several years. The “therapeutic token cloning” pattern allows straightforward, fast, gateway-centered handling of the different tokens that arrive at the gateway from various internal and external IdPs. Most importantly, it simplifies token processing for service providers: the complexity of token handling is managed at the outer boundary and not carried into the internal architecture.

And as we have seen once again, implementing a new architectural pattern on Kong Gateway did not run into any limitations.

Credits

Title image by Javidestock on Shutterstock