In the previous blog post, we discussed mutual Transport Layer Security (mTLS) with consumer authentication, even when perimeter security terminates incoming TLS communications. Now, we turn our attention to implementing and enforcing security policies around the token validation process. Token validation is a fundamental responsibility of an API gateway and enables the implementation of a Zero-Trust Architecture (ZTA). Given the ‘never trust, always verify’ core principle of ZTA, API gateways in particular have a unique responsibility and should perform an appropriate token validation process.

Overview - the external perspective

This technical blog is about token validation on the Kong Gateway. Token validation is one of the core tasks when implementing security policies in a ZTA, touching on the principles of ‘never trust, always verify’ and ‘inspect and log all traffic’. But before we get into the details of token validation, let’s take a quick look at the tokens used in OpenID Connect (OIDC) and OAuth 2.0, which are widely adopted standards used for authentication and authorization in modern architectures, especially in web applications with an underlying microservice architecture approach. The Internet Engineering Task Force (IETF) OAuth 2.0 core specification RFC 6749 defines several ways for a client to obtain tokens. These standards distinguish between identity, access, and refresh tokens.

An identity token (ID token for short) is always a JSON Web Token (JWT) that contains information about the identity of the authenticated user. A JWT is a by-value token, also known as a self-contained token. The token is a JSON string which is encoded but unencrypted by default. These tokens are used to verify the user’s identity and obtain profile information. The ID token, issued by an authorization server acting as an Identity Provider (IdP), is intended for the client application and provides information about the authenticated user.

An access token is typically also a JWT, although it can be an opaque or by-reference token. Interestingly, the underlying OAuth 2.0 standard does not define a specific format for access tokens (see RFC 6749, Access Token). When an opaque token is involved, it is typically a by-reference token. This token consists of a randomly generated string that identifies information in the token issuer’s data store. Unlike ID tokens, the access token is used to make authorized requests to protected resources on behalf of a user or client application. Thus, it’s about selectively restricting access to a resource. An access token is also called a bearer token because these tokens grant access to resources based on possession of the token itself. However, the token must still satisfy the authorization requirements to gain access to the requested resources. Therefore, access tokens contain or point to information about privileges and scopes.

Often, access tokens also contain identity information reducing the dependency on IdPs and allowing authorization decisions to be made in the additional identity context. Therefore, whether an access token contains so-called Personally Identifiable Information (PII) or not is a decision that depends on the security architecture and the requirements of the system.

Refresh tokens are long-lived tokens used to obtain new access tokens without requiring the user to re-authenticate. They are commonly used in OAuth 2.0 to obtain fresh access tokens. When an access token is paired with a refresh token, it facilitates the generation of short-lived access tokens without the need for frequent re-authentication. Configuring refresh token rotation is a best practice for regularly replacing refresh tokens to improve security. Both JWT and opaque token formats are possible.

A JWT has a compact, self-contained data structure and supports cryptographic signing, which makes any tampering with the token detectable. The JSON Web Signature (JWS) standard describes the details of digital signing (RFC 7515). Self-contained means that all necessary information is contained within the JWT itself. Furthermore, a JWT can be encrypted using the JSON Web Encryption (JWE) standard (RFC 7516) for higher security requirements, but typically JWTs are only base64url-encoded and not encrypted, since they should be transported over an encrypted connection anyway (TLS communication).

However, the self-contained data structure allows offline validation without a verification call to the token issuer. Hence, there are two options to validate tokens: online and offline. Online validation involves verification with the help of the token issuer, while offline validation verifies locally without making a network call to the token issuer (hence the alternative term of local validation). A JWT access token can, while an opaque or reference access token must, be verified via online validation at the introspection endpoint (defined in its own token introspection specification, RFC 7662). Online validation at the token issuer verifies whether a token is well-formed, not tampered with and not expired or revoked. Additional token payload information can be returned to the caller for further verification.
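To make the online path more tangible, here is a minimal Python sketch of an RFC 7662 introspection call from the caller's side. The endpoint URL, the client credentials, and the helper names are hypothetical, and HTTP Basic authentication is only one of the ways a caller may authenticate at the introspection endpoint, so treat this as an illustration under those assumptions rather than a reference client.

```python
import base64
import json
import urllib.parse
import urllib.request


def build_introspection_request(endpoint, token, client_id, client_secret):
    """Prepare (but do not send) an RFC 7662 token introspection request.

    Assumption: the caller authenticates with HTTP Basic credentials.
    """
    body = urllib.parse.urlencode(
        {"token": token, "token_type_hint": "access_token"}
    ).encode("ascii")
    credentials = base64.b64encode(
        f"{client_id}:{client_secret}".encode("utf-8")
    ).decode("ascii")
    return urllib.request.Request(
        endpoint,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {credentials}",
            "Content-Type": "application/x-www-form-urlencoded",
            "Accept": "application/json",
        },
    )


def token_is_active(response_body: bytes) -> bool:
    """Interpret the introspection response; only a literal true counts as active."""
    try:
        return json.loads(response_body).get("active") is True
    except (ValueError, AttributeError):
        # Malformed or unexpected responses are treated as "not active".
        return False
```

Sending the prepared request, for example with `urllib.request.urlopen(request, timeout=2)`, is exactly the extra network hop that offline validation avoids. Treating every malformed response as inactive keeps the check fail-closed.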

This verification can also be outsourced to a specialized Externalized Authorization Management (EAM) system, also known as the “Dynamic Authorization” approach. In technical terms and in our context, the API gateway acts as a Policy Enforcement Point (PEP) which calls a Policy Decision Point (PDP) executed by an EAM system. The Open Policy Agent (OPA) provides an open-source authorization engine based on the Rego policy language that can act as an EAM. OPA is a policy engine that reached the Cloud Native Computing Foundation (CNCF) graduated maturity level on January 29, 2021. OPA offloads the policy decision-making process when dynamic and complex fine-grained access control policies need to be enforced at the API gateway or any other PEP. If desired, the Kong OPA plugin can route requests to an Open Policy Agent. At this point, I would like to refer to an interesting blog from the Kong engineering team, where the Phantom Token Approach, which combines the usage of opaque and JWT tokens for special security reasons, is presented together with OPA.

However, please note that offline validation has tremendous advantages:

- reduced dependency on the token issuer (and, where appropriate, the OPA server), which improves robustness and reliability (temporary IdP and/or OPA slowdowns, outages, or network failures do not affect the validation process),

- improved performance, because there is no latency from additional validation network calls,

- improved confidentiality, because local-only validation minimizes exposure to network-based attacks such as man-in-the-middle attacks or DNS spoofing, and

- better scalability, especially in distributed systems or microservices architectures, where numerous components may need to validate tokens simultaneously.

The drawback of not being notified about revoked tokens may be negligible and can be ignored in environments where tokens have short lifespans (e.g., between 5 and 15 minutes) and the impact of a compromised token is minimal (more about token revocation in RFC 7009).

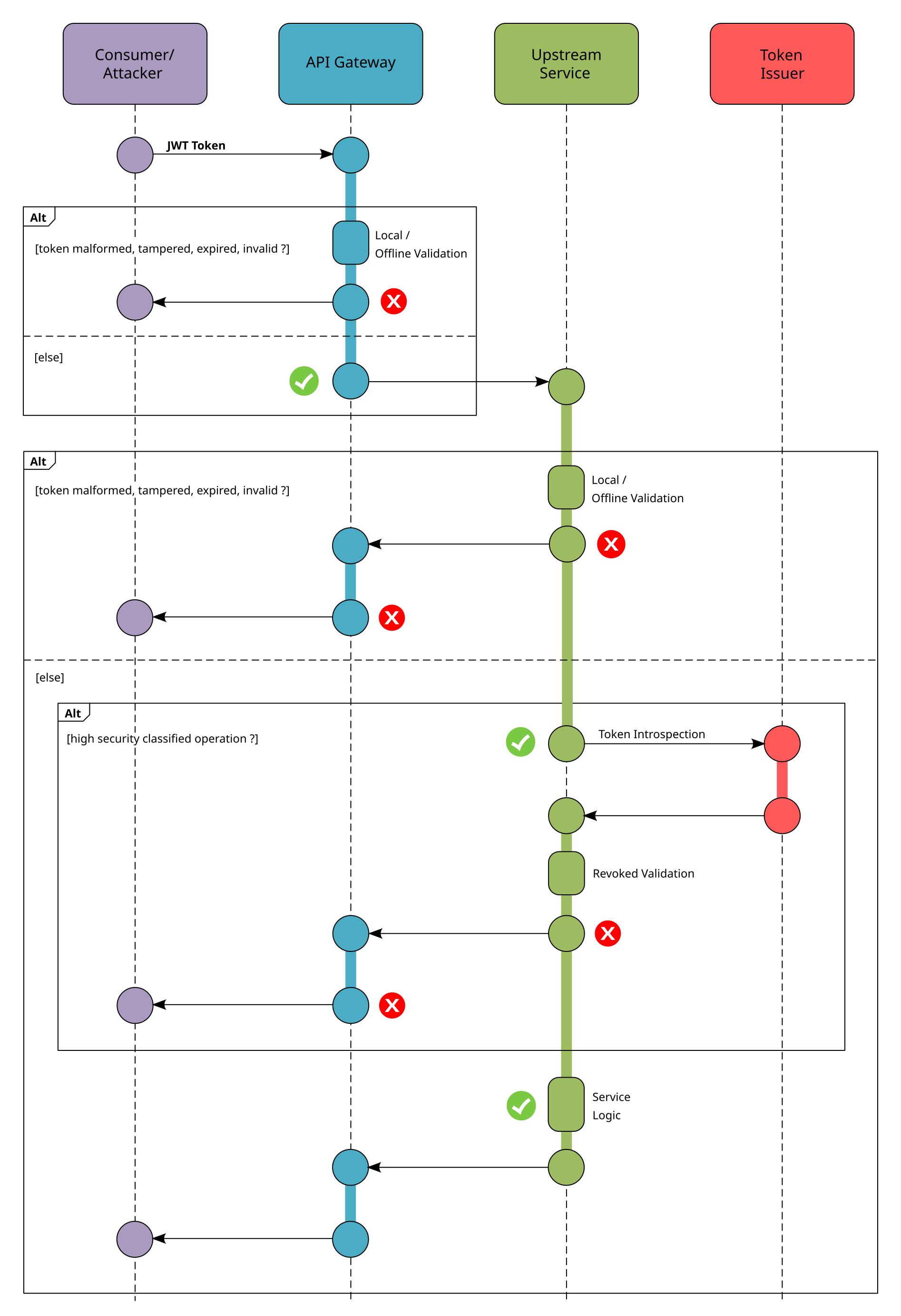

However, the two approaches of online and offline validation can also be used in combination in a ZTA architecture. A possible API gateway and service interaction with balanced token validation is also explained in the excellent Okta video by Aaron Parecki. I have illustrated the principle in the following diagram:

An API gateway must be a high-speed component making quick authorization decisions for countless invocations. If an API gateway is slow, it can become a bottleneck for the entire system and jeopardize its non-functional performance requirements. Additionally, slower API gateways may require more instances or resources to handle the same amount of traffic, resulting in higher operational costs. The idea from the aforementioned Okta video for a combined approach is simple and effective: the gateway performs a fast, coarse-grained authorization validation, while the services themselves decide whether a finer-grained authorization check with online validation (revoked token verification), possibly with the help of an EAM, is necessary for critical and high-security operations.

Thus, in the spirit of ZTA, each component in the communication chain independently verifies the access token, possibly to a different degree. In my opinion and for the reasons mentioned above, the API gateway should always carry out local/offline validations whenever possible and leave the fine-grained authorization validation to the services themselves. However, special circumstances may require special online validations at the gateway as well (but you get the basic idea).

Details - the inside perspective

JWTs consist of three main parts: a header, a payload, and a signature. The header specifies the type of token and the signing algorithm, the payload contains data, and the signature is used to verify the authenticity of the token. These parts are base64url-encoded and concatenated with dots to form the JWT (header.payload.signature).
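The three-part structure can be reproduced with a few lines of Python using only the standard library. This is a minimal sketch for illustration: it assumes the symmetric HS256 algorithm and a made-up shared secret, the helper names are my own, and a production setup would rely on a maintained JWT library and usually on asymmetric keys.

```python
import base64
import hashlib
import hmac
import json


def b64url_encode(raw: bytes) -> str:
    """Base64url-encode without padding, as used for JWT segments."""
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the stripped padding."""
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign_hs256(header: dict, payload: dict, secret: bytes) -> str:
    """Build header.payload.signature for an HS256-signed token."""
    signing_input = ".".join(
        b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{b64url_encode(signature)}"


def verify_hs256(token: str, secret: bytes) -> dict:
    """Return the payload if the signature matches, otherwise raise ValueError."""
    header_b64, payload_b64, signature_b64 = token.split(".")  # ValueError if not 3 parts
    header = json.loads(b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        # Never let the token choose an algorithm the verifier did not expect.
        raise ValueError("unexpected signing algorithm")
    expected = hmac.new(
        secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    if not hmac.compare_digest(expected, b64url_decode(signature_b64)):
        raise ValueError("signature mismatch")
    return json.loads(b64url_decode(payload_b64))
```

Because the signature covers the encoded header and payload, changing a single character in either segment makes `verify_hs256` fail, while anyone can still read the payload without the key.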

In the context of JWTs, pieces of information or data are transported using so-called claims (like assertions in SAML). These claims provide metadata about the JWT itself and any custom data that is relevant to the application (ID token) or for the secure invocation handling of protected resources (access token). Claims can be categorized as registered, public, or private, depending on their standardization and intended use.

Registered claims are predefined by the JWT specification (see IETF RFC 7519, Section 4.1). They play a crucial role in establishing the basic semantics of JWTs and enhance the security of the authentication and authorization process. The iss (issuer), exp (expiration time) and aud (audience) are essential for basic token validation. These claims ensure that the token was issued by a trusted party (iss), has not expired (exp), and is intended for consumption by the specified recipient (aud). According to the RFC standard, the aud claim can contain a list of audiences meaning that the security token is intended for multiple recipients. It provides a standardized way to handle multi-party authorization scenarios. Therefore, it is also possible to include intermediaries such as API gateways in the aud claim. The API gateway serves as an important authorization boundary and can validate both its own and the upstream recipient audience (ultimately a security architecture decision).
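The basic checks on iss, exp, and aud described above can be sketched as follows. The function name, the leeway parameter, and the example values are assumptions for illustration; the sketch expects a payload whose signature has already been verified and is not how any particular Kong plugin implements the checks.

```python
import time


def validate_registered_claims(payload, trusted_issuers, expected_audience,
                               leeway=0, now=None):
    """Check iss, exp and aud on an already signature-verified payload.

    Returns a list of problems; an empty list means the basic checks passed.
    """
    now = time.time() if now is None else now
    problems = []

    # iss: the token must come from an issuer we explicitly trust.
    issuer = payload.get("iss")
    if not isinstance(issuer, str) or issuer not in trusted_issuers:
        problems.append("iss: issuer is not trusted")

    # exp: reject on or after the expiration time, allowing optional clock-skew leeway.
    exp = payload.get("exp")
    if not isinstance(exp, (int, float)) or now >= exp + leeway:
        problems.append("exp: token is expired or has no expiration time")

    # aud: may be a single string or a list of recipients.
    aud = payload.get("aud")
    if isinstance(aud, str):
        audiences = [aud]
    elif isinstance(aud, list):
        audiences = aud
    else:
        audiences = []
    if expected_audience not in audiences:
        problems.append("aud: token is not intended for this recipient")

    return problems
```

With a payload whose aud is `["kong-gateway", "orders-service"]`, both the gateway and the upstream service can run the same function with their own `expected_audience`, which mirrors the multi-recipient scenario described above.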

Public claims in JWTs are custom claims defined by the parties implementing the JWT. These claims are not standardized. However, commonly used public claims may be registered with the Internet Assigned Numbers Authority (IANA) in the JSON Web Token Claims Registry, providing a way to document and share their definitions with the community. For example, the claim registry contains commonly used profile or user-specific information (claims such as family_name, preferred_username, or email). Some claims have a significant authorization meaning, such as the client_id (client identifier), azp (authorized party), scope, roles, and groups claims.

The client_id and azp claims are important because they identify the party that requested and authorized the access token. This helps prevent unauthorized access and ensures that the token was issued to a known client. The scope claim lists the general privileges granted to the access token (certain scopes of actions), typically as a space-separated string (see RFC 8693, Section 4.2). This facilitates the management of resource access by ensuring that only authorized actions (operations) are performed. From my point of view, that validation tends towards fine-grained authorization. Likewise, the roles and groups claims allow for fine-grained access control based on a user’s role or group membership. Users or applications only have access to the resources they need to do their job. For example, an access token with a “manager” role would have access to sensitive financial data, while a “customer representative” role would only have access to customer information and not to sensitive financial data.

Private claims are additional information included in a security token, not defined in any existing specification, such as arbitrary user attributes or roles. These claims can be customized based on the specific needs of the application or system. This allows for greater flexibility and control over access to resources for the client and services. In my opinion an API gateway could do claim existence and syntactical pre-validations on these claims, but not deep semantic validation (ultimately a security architecture decision).

These days, however, the RFC 9068 standard (JSON Web Token Profile for OAuth 2.0 Access Tokens) provides clear guidelines on which claims must, should, or may be included in a JWT access token. In my opinion, you should follow this standard; the claims listed in Section 2.2 are mandatory, while the optional authentication information claims are listed in Section 2.2.1. Other optional identity and authorization claim recommendations are listed in the following Sections 2.2.2 and 2.2.3. Here are the access token payload claims classified as mandatory by the RFC 9068 specification.

| Claim Name | Claim Meaning | Claim Description | Reference |
|---|---|---|---|
| iss | issuer | Identifies the principal issuing the JWT. | Section 4.1.1 of [RFC7519] |
| exp | expiration time | Identifies the expiration time on or after which the JWT must not be accepted for processing. | Section 4.1.4 of [RFC7519] |
| iat | issued at | Identifies the time the JWT was issued. Determines the age of the JWT. | Section 4.1.6 of [RFC7519] |
| aud | audiences | Identifies the recipients for whom the JWT is intended. Each principal intended to process the JWT MUST identify itself with a value in the audience claim. | Section 4.1.3 of [RFC7519] |
| sub | subject | Identifies the principal that is the subject of the JWT. | Section 4.1.2 of [RFC7519] |
| jti | JWT id | Provides a unique identifier for the JWT. The jti claim can be used to prevent the JWT from being replayed. | Section 4.1.7 of [RFC7519] |
| client_id | client identifier | Provides the client identifier that requested the token (not a secret, unique to the authorization server). | Section 4.3 of [RFC8693] |
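A claim existence pre-validation for these mandatory claims is easy to sketch. The helper below is a hypothetical illustration in Python that only checks presence on a decoded payload; it deliberately does no semantic validation of the values.

```python
# Claims that RFC 9068 classifies as mandatory for a JWT access token.
RFC9068_REQUIRED_CLAIMS = ("iss", "exp", "aud", "sub", "client_id", "iat", "jti")


def missing_required_claims(payload: dict) -> list:
    """Return the mandatory claims that are absent or empty in the payload."""
    return [
        claim
        for claim in RFC9068_REQUIRED_CLAIMS
        if payload.get(claim) in (None, "", [])
    ]
```

A gateway-side check could reject a token as soon as this list is non-empty and leave the interpretation of the individual values to later validation steps.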

Other documents about JWT token validation include the IETF RFC 8725 specification (JSON Web Token Best Current Practices) and the highly recommended book “The JWT Handbook” by Sebastián E. Peyrott. Also take a look at the OWASP (Open Web Application Security Project) Top 10 API security risks, which points out the most critical security risks facing APIs. Several list items are related to tokens or token management.

Kong - token validation related plugins

Now that we understand the JWT details, we can look at the validation and implementation inside the Kong Gateway. As discussed, the correct way to prevent attacks is to only consider a token valid if both the signature and the content are valid. Therefore, we look at the standard Kong plugins that take both aspects into account. But if the standard plugins are not sufficient, writing your own custom plugins with additional validation logic is always a possible solution. From my previous blogs in the Kong series, where I also presented self-implemented custom plugins for various use cases, it is known that priority determines the order of plugin execution. Therefore, we will go through the relevant Kong plugins for token validation according to their predefined execution priority.

jwe-decrypt plugin: The first out-of-the-box plugin in the processing pipeline could be the jwe-decrypt plugin (priority 1999). This Kong plugin allows decrypting an encrypted incoming token in the request. The plugin works unspectacularly, taking the token, decrypting it, and passing it on for further processing. So, if you have to deal with JWE tokens, this is the solution provided by Kong to make offline validation possible.

oauth2-introspection plugin: The next Kong plugin in priority order is the oauth2-introspection plugin (priority 1700). The plugin enables the discussed online validation by the token issuer (the third-party OAuth 2.0 server) through the standardized introspection endpoint. Authentication of a Kong consumer is optionally facilitated, and the registered claims can be proxied to the upstream service. As with other authentication plugins in the Kong plugin portfolio, the oauth2-introspection plugin seamlessly manages anonymous users.

jwt plugin: The next Kong plugin in line is the jwt plugin (priority 1450). The plugin checks signed incoming tokens, verifies the token’s signature, and can check the registered claims exp (expiration time) and nbf (not before). The plugin is primarily used to authenticate consumers via a configurable token claim (default is the iss claim), which contains the key of a JWT credential that in turn refers to an RSA public key or secret for token validation. If authentication fails, the optional anonymous configuration parameter allows the request to continue as an anonymous consumer instead of being terminated. The use of the plugin is explained in detail in a Kong video. Note that there are some special requirements for decK and Kong Ingress Controller users mentioned in the plugin documentation.

openid-connect plugin: The openid-connect plugin (priority 1050) is also able to check the token signature, along with the iss and exp claims (default behavior). This plugin has the most token validation options, although it does not have a fully-fledged validation engine. The plugin parameter issuers_allowed prevents tokens from unknown issuers from being processed. The exp claim check allows some configurable leeway in expiration verification. The verify_claims parameter allows for “standard” claim validation (unfortunately, at this point the Kong documentation is not sufficiently detailed to allow a quick and trouble-free configuration). However, I found out from Kong support and my own research that at least the following claims can be verified (for completeness, sometimes with repeated explanation):

- alg, algorithm, cryptographic algorithm used to sign the token.

- iss, issuer, identifies the principal issuing the JWT, see also RFC 9068 mandatory access token claims.

- sub, subject, identifies the principal that is the subject of the JWT, see also RFC 9068 mandatory access token claims.

- aud, audiences, identifies the recipients for whom the JWT is intended. Each principal intended to process the JWT MUST identify itself with a value in the audience claim, see also RFC 9068 mandatory access token claims.

- azp, authorized party, identifies the party that requested and authorized the access token.

- exp, expiration time, identifies the expiration epoch time on or after which the JWT must not be accepted for processing.

- iat, issued at, identifies the epoch time the JWT was issued. Determines the age of the JWT.

- nbf, not before, defines the earliest epoch time at which the JWT should start being considered valid, indicating the token’s readiness for processing.

- auth_time, authentication time, represents the epoch time when the user authentication occurred. Useful for security policies which require a certain freshness of authentication.

- hd, hosted domain, used in Google’s authentication, specifies the hosted domain of the user’s Google account (typically the domain associated with the email address).

- at_hash, access token hash, the hash of the access token used to mitigate token substitution attacks.

- c_hash, code hash, the hash of the authorization code used to mitigate code interception or substitution attacks.

Additionally, there are several configuration properties in the plugin that allow you to check a specific claim for a specific value. In each case this is done with two properties, one for the claim name and one for the value, e.g. roles_claim and roles_required. The names of these properties typically correspond to some functionality that has been added, such as OIDC Authenticated Group Mapping. This configuration approach also applies to the groups claim, audience claim, and scope claim.

Let us walk through the configuration approach using the roles claim as a guide and give an example of what the AND/OR cases mentioned in the documentation are all about. The roles claim is a public claim and part of the IANA JSON Web Token Claims Registry, which references the IETF RFC 7643 specification, Section 4.1.2, which in turn reads:

“A list of roles for the user that collectively represent who the user is, e.g., “Student”, “Faculty”. No vocabulary or syntax is specified, although it is expected that a role value is a String or label representing a collection of entitlements. This value has no canonical types.”

You can define which claim in your tokens contains the roles if it differs from the roles naming convention, and then you can define with roles_required the claim values that you want to make mandatory for token verification to be successful. Here is an example of an aggregated claim where multiple pieces of information are aggregated into a single claim:

    {
      "user": {
        "name": "karl",
        "assignments": [ "student", "mathematics" ]
      }
    }

With a token that has this kind of structure, you would set "roles_claim": ["user", "assignments"] so that the openid-connect plugin tries to find the “assignments” subclaim under the “user” claim.

Once the openid-connect plugin knows where to read the roles value from (the "assignments" subclaim under "user" in the above example), "roles_required" can be used to check if the roles a token has are valid. Values separated by spaces within one array entry must all be present (AND), while of the comma-separated array entries only one must be satisfied (OR). For example with roles_required:

    roles_required = [ "student mathematics", "administrators" ]

The token sent to Kong must have both roles "student" AND "mathematics" OR the "administrators" value to be allowed access.
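The AND/OR semantics described here can be expressed in a few lines of Python. This is only my own sketch of the documented behavior, with hypothetical helper names, and not the plugin's actual implementation.

```python
def find_claim(payload, path):
    """Walk a claim path such as ["user", "assignments"] through nested objects."""
    value = payload
    for key in path:
        if not isinstance(value, dict) or key not in value:
            return None
        value = value[key]
    return value


def satisfies_required(token_values, required):
    """OR across the entries of `required`, AND across the
    space-separated values inside a single entry."""
    if isinstance(token_values, str):
        token_values = token_values.split()
    present = set(token_values or [])
    groups = [set(entry.split()) for entry in required]
    # An entry passes only if it is non-empty and all of its values are present.
    return any(bool(group) and group <= present for group in groups)
```

For the example token above, `find_claim(token, ["user", "assignments"])` yields the list of assignments, and `satisfies_required` accepts it because the first entry, "student mathematics", is completely covered. A token carrying only "student" would be rejected, whereas one carrying "administrators" would pass through the second entry.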

In this way, for roles, groups, audiences, and scopes, you can specify any claim name as either roles_claim, groups_claim, audience_claim, or scopes_claim, and any value you want to make sure exists in that claim in roles_required, groups_required, audience_required, or scopes_required. In other words, you can repurpose these parameters for other claims if you don’t necessarily want to verify “real” roles, groups, audiences, or scope claims.

The admin_claim and authenticated_groups_claim parameters were introduced to allow OIDC group mapping when logging into the Kong Manager, so this is a very specific use case.

Regarding “introspection”, Kong determines whether to use introspection depending on which auth_methods you have defined. If you have only auth_methods: introspection, then both opaque tokens and JWTs are introspected, assuming your IdP has an introspection endpoint. In other words, the auth_methods configuration determines whether JWT validation is done online (auth_methods: introspection) or offline (auth_methods: bearer).

The plugin is also able to refresh access tokens if a refresh token is available.

jwt-signer plugin: The jwt-signer plugin (priority 1020) allows you to verify, sign, or re-sign tokens in a request. This plugin uses the same openid-connect library as the openid-connect plugin and allows similar verifications. Like the openid-connect plugin, the jwt-signer plugin offers several validation configuration options, but the main purpose of the plugin is to sign access tokens with the keys configured at the gateway, so that the upstream services can then validate those tokens. The plugin makes the Kong Gateway appear as a token issuer for upstream services: it exchanges the iss claim and also provides an endpoint for public key retrieval. If you just want to validate tokens and do not need to re-sign them for the upstream services to validate, then the jwt-signer plugin is probably not something you need to consider.

oauth2 plugin: The oauth2 authentication plugin turns Kong into an IdP for OAuth 2.0 tokens, i.e. it manages the token creation process, stores the tokens, and handles token refresh. The oauth2 plugin does not work in DB-less or hybrid mode; it only works in a traditional deployment architecture where each Kong node has access to the Postgres configuration database where the tokens are stored.

That’s it for JWT token handling on the Kong gateway using the standard means.

Conclusion

Token validation is a complex topic and plays an important role in ZTA. Architectural trade-offs are required, as we discovered with the topic of revocation and fine-grained authorization validation. As we have seen, Kong offers a lot of ready-made plugins around JWT and validation. If there are requirements that are not covered, you can always write custom plugins for further validation. To return to my oft-repeated statement, with Kong Gateway you will not run into any limitations.

Beyond words: Thanks to Johannes Franken and Andreas Bittorf for contributing their many years of experience in this area and challenging me to implement the full range of token validation. Special thanks also to Karl Kalckstein for his overview and in-depth details about the Kong plugins in the context of token validation.

Credits

Title image by simonkr on Getty Images