You cannot dive into Kubernetes metrics without stumbling across Prometheus. With ~50k GitHub stars, the 2nd ever hosted CNCF project is a long-time major player and an obvious go-to in the world of metrics tech stacks. The Prometheus community is huge: many questions and scenarios have already been discussed publicly, and prometheus-community, the GitHub organization dedicated to the community, provides unofficial yet very sophisticated and useful components, artifacts and best practices.

We have run Prometheus in our Kubernetes clusters, too. Like any piece of software, Prometheus has some drawbacks. For example, as clusters grow, the instances get huge. In our case, we are talking about managing an extra node group just for large AWS EC2 instances, as our usual, more cost-efficient instances don’t provide enough memory to host them. With this and a few other identified use-case specific issues, we wanted to give something else a try and see if it’s worth migrating away from Prometheus.

This is the beginning of a short series of blog posts. We take you along on our journey to replace Prometheus with OpenTelemetry. This first post is a general introduction to OpenTelemetry. If you like, you can skip to one of the other, more technical and practical parts:

What Is OpenTelemetry?

In platform and operations teams, we usually talk about specific software: Did we install Grafana? Is fluent-bit running properly? Have you checked the velero backups? What’s the ArgoCD status? All of these applications offer a specific, (hopefully) well-designed feature set, which is the reason we run them. And there is nothing wrong with that. However, there may come a time when we want to replace something. Maybe the application we use is discontinued or the licenses have changed. Or maybe there’s something better than what we currently deploy. In such situations, we often start from scratch, because each application brings its own ecosystem: custom APIs and protocols, custom formats, maybe some proprietary parts.

This scenario is one you won’t encounter when using OpenTelemetry. But why is that? OpenTelemetry has declared its mission to be enabling effective observability by making high-quality, portable telemetry ubiquitous, and its vision is to be easy, universal, vendor-neutral, loosely coupled and built-in. Now take a minute to think about how to achieve these with the "we’re open-source, but please lock in and pay for our enterprise version" approach. It’s pretty hard to be vendor-neutral and loosely coupled when your application is your main focus, isn’t it? That’s why OpenTelemetry is fundamentally different from most software we know. You can see this in the artifacts the OpenTelemetry project provides. Instead of a container and a helm chart, it consists of:

- OpenTelemetry Protocol (OTLP): OTLP is a specification that defines how observability data is exchanged in flight between two systems. Essentially a protobuf schema for gRPC, it defines how data is shared between systems and therefore includes a data format.

- SDKs: In order to contribute applications to the OpenTelemetry community or to enable your proprietary software to benefit from it, official SDKs are released. They wrap the interactions with the OTLP in handlers for various languages. With these, you can build a completely new application for OpenTelemetry or just use them for instrumentation.

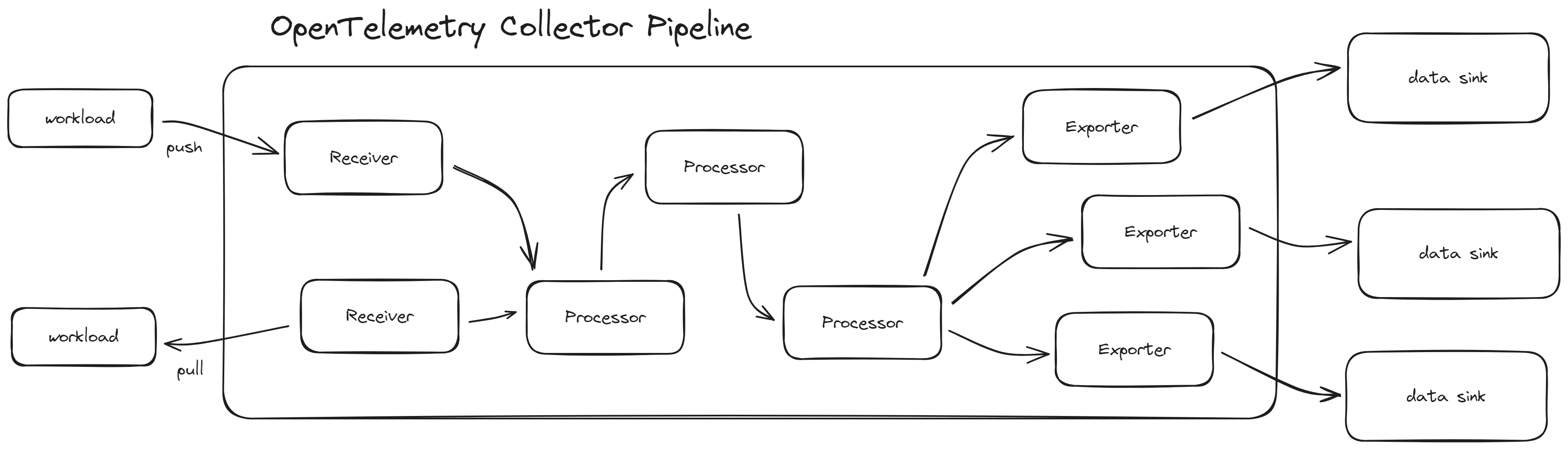

- OpenTelemetry Collector (OTelCol): This deployable application acts as a gateway between systems. By configuring pipelines with receivers, processors and exporters as building blocks, the collector can connect the dots of an observability stack and allows loose coupling.

- A few auxiliary artifacts like a helm chart and a Kubernetes operator.
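To make the "data format" part of OTLP a bit more tangible, here is a minimal sketch of what a single gauge measurement could look like in OTLP's JSON encoding (besides protobuf over gRPC, OTLP also defines a JSON encoding over HTTP). The nesting of resource, scope, metric and data point follows the OTLP metrics schema, but the payload is heavily simplified. The helper `gauge_payload`, the scope name `example.manual` and the sample values are assumptions made up for illustration and are not part of any SDK.

```python
import json
import time


def gauge_payload(service: str, metric: str, value: float, unit: str = "1") -> dict:
    """Build a simplified OTLP/JSON metrics payload holding one gauge data point.

    Hypothetical helper for illustration only; real applications would
    normally let an OpenTelemetry SDK produce and send this data.
    """
    return {
        "resourceMetrics": [{
            # The resource describes the entity that produced the telemetry.
            "resource": {
                "attributes": [
                    {"key": "service.name", "value": {"stringValue": service}},
                ],
            },
            "scopeMetrics": [{
                # The scope names the instrumentation that recorded the metric.
                "scope": {"name": "example.manual"},  # assumed name
                "metrics": [{
                    "name": metric,
                    "unit": unit,
                    "gauge": {
                        "dataPoints": [{
                            "asDouble": value,
                            # 64-bit integers are encoded as strings in JSON.
                            "timeUnixNano": str(time.time_ns()),
                        }],
                    },
                }],
            }],
        }],
    }


if __name__ == "__main__":
    # Sample values are made up.
    payload = gauge_payload("checkout", "queue.length", 7.0)
    print(json.dumps(payload, indent=2))
```

Any system that understands OTLP can interpret this structure, regardless of which tool produced it. That shared shape is what makes the loose coupling possible.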

Let’s take a moment to look at the OpenTelemetry Collector. While it’s entirely optional, it can serve as the center of an observability stack, connecting different components as an additional layer of abstraction. To put it to good use, you first configure components, which serve as building blocks.

- Receivers represent the inbound part - you could also think of it as the E in ETL. You can provide an API where other systems push to or let OTelCol actively scrape targets.

- Processors are the transforming pieces. They can do many different things, such as editing a label of a metric or controlling the batch throughput of the collector for performance optimization.

- Exporters do what their name implies: Exporting the received and processed data to any system supported by the chosen exporter.

To bring on the action, you combine these components into pipelines. You can have any number of pipelines, any number of components per pipeline, and use a single component instance multiple times. The components of a pipeline run in order, which mainly matters for processors.
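The following toy model sketches how receivers, processors and exporters fit together. It is not the collector's API or configuration format (the real collector is configured in YAML). Every name in it (`Pipeline`, `Exporter`, `scrape_app`, `drop_debug`, `add_cluster_label`) is hypothetical and only exists to illustrate two points from above: processors run in the order they are listed, and one component instance can be shared by several pipelines.

```python
from dataclasses import dataclass, field


@dataclass
class Exporter:
    """Toy exporter: remembers everything that reaches the end of a pipeline."""
    name: str
    sent: list = field(default_factory=list)

    def export(self, batch: list) -> None:
        self.sent.extend(batch)


@dataclass
class Pipeline:
    receivers: list   # callables returning a list of metric dicts
    processors: list  # callables mapping a batch to a new batch
    exporters: list   # Exporter instances

    def run(self) -> None:
        # Collect data from all receivers.
        batch = [m for receive in self.receivers for m in receive()]
        # Apply processors strictly in the order they are listed.
        for process in self.processors:
            batch = process(batch)
        # Fan out a copy of the result to every exporter.
        for exporter in self.exporters:
            exporter.export([dict(m) for m in batch])


def scrape_app() -> list:
    """Toy receiver returning two made-up metrics."""
    return [
        {"name": "http_requests", "value": 3, "labels": {"env": "dev"}},
        {"name": "debug_counter", "value": 1, "labels": {"env": "dev"}},
    ]


def drop_debug(batch: list) -> list:
    """Toy processor: filter out metrics whose name starts with 'debug_'."""
    return [m for m in batch if not m["name"].startswith("debug_")]


def add_cluster_label(batch: list) -> list:
    """Toy processor: attach a cluster label to every metric."""
    return [{**m, "labels": {**m["labels"], "cluster": "demo"}} for m in batch]


if __name__ == "__main__":
    storage = Exporter("storage")
    debug = Exporter("debug")

    # Two pipelines share the same receiver and the same storage exporter.
    filtered = Pipeline([scrape_app], [drop_debug, add_cluster_label], [storage])
    raw = Pipeline([scrape_app], [], [storage, debug])

    filtered.run()
    raw.run()
    print(len(storage.sent), len(debug.sent))
```

In this sketch, the order of the two processors does not change the result, but it determines how much work `add_cluster_label` does: placing the filter first means fewer metrics need to be relabeled.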

Fortunately, we don’t have to build these components from scratch. The open source community has an official repository where you can contribute to OpenTelemetry’s success: open-telemetry/opentelemetry-collector-contrib. Here, all the fantastic integration solutions are developed and shared. From this repository, they are all included in a container image. So, if you work with the official helm chart, you get all of it out of the box - write some YAML to configure it, done! FYI: There’s also the option to build your own image and include only the specific pieces you need. And remember: The collector is still optional. You can configure your applications to push data directly to an OTLP-supporting system if that suits your case.

As you might have already guessed from this, integrations play a crucial role when implementing a metrics stack based on OpenTelemetry. Without at least one receiver and one exporter, the collector does not bring any value at all. Fortunately, OpenTelemetry has grown organically (we’ll talk about the history in a moment) and is backed by many big tech players. Just to name a few: There are AWS, Azure and GCP as hyperscalers, but also well-known observability tools like Grafana, Jaeger, Datadog, Dynatrace, New Relic and Splunk (see the official vendors list). There’s AWS Distro for OpenTelemetry, a collection of software specifically for using AWS services while observing those with OpenTelemetry. Datadog is publicly committing to OpenTelemetry, both by adding more support to its product and by contributing to the project.

Where Does It Come From?

So, where does OpenTelemetry’s remarkably different approach come from?

Distributed systems and observability were a thing before Kubernetes and CNCF existed. In 2010, Google published a paper about Dapper, a tracing solution. In 2012, Twitter followed by publishing Zipkin, yet another distributed tracing solution. Kubernetes appeared in 2014, and Jaeger (guess what: distributed tracing) was introduced by Uber in 2015. That same year, Ben Sigelman, the founder of Lightstep, published a blog post, now also known as the OpenTracing manifesto. He argues that instrumenting code is broken because it always comes with a vendor lock-in (i.e. the software defining how tracing works).

> And there’s the rub: It is not reasonable to ask all OSS services and all OSS packages and all application-specific code to use a single tracing vendor; yet, if they don’t share a mechanism for trace description and propagation, the causal chain is broken and the traces are truncated, often severely. We need a single, standard mechanism to describe the behavior of our systems.
>
> Ben Sigelman in Towards Turnkey Distributed Tracing

Since you typically want to trace not only your very own software but also its auxiliary applications in a stack, it’s critical that all these applications support exactly that one tracing vendor you chose. Thus, these open-source systems would have to support numerous different vendors, which is just not realistic. So, talking about specific vendors is not good and the overall tracing has to be changed to a vendor-neutral approach.

This post was published right after the acceptance of his project OpenTracing as CNCF’s 3rd hosted project in 2015. Another Google turn: OpenCensus was released in 2017. It consisted of SDKs targeting not only traces but also metrics.

What does this have to do with OpenTelemetry? Well, it’s not an additional project in this list of projects. Instead, it is a merger of OpenTracing and OpenCensus that took place in 2019. Backed by lots of experience, battle-tested individuals, and broad publicity, OpenTelemetry aimed to be the next major release for both of its predecessors, while OpenTracing became the second CNCF project to be archived. Although OpenTelemetry was accepted as a CNCF sandbox project in 2019 and as an incubating project in 2021, it is not just another newly grown open-source project with a questionable future and too much risk to take. From the beginning, it has been supported by CNCF, Google, Microsoft, Uber and others (announcement by CNCF, announcement by Google). So we expect it to stay and flourish.

Balancing Benefits and Costs

Circling back to the introduction: Every system has drawbacks. Benefits come at a cost or trade-off. OpenTelemetry promises abstraction. All of us have learnt that loose coupling is desirable, but so is high cohesion. You have to decide for yourself whether you want to lock in to one technology and have a fairly simple stack of exactly that technology, or whether you want to pay the price of higher complexity and a few more artifacts to gain some degrees of freedom with the additional layer of abstraction OpenTelemetry adds to your stack. As a platform team operating about 80 AWS accounts and 30 Kubernetes clusters, we decided to opt for the more complex solution. It allows us:

- to break apart lifecycles. Our long-term storage solution can be decoupled from the metrics collection, so we no longer have big bang releases.

- to apply divide and conquer to our metrics stack. Once the migration from Prometheus to OpenTelemetry has settled, we can rework our storage solution and no DevOps team will notice.

- to mitigate huge Prometheus instances that keep everything in memory.

- to easily test new features as it’s easy to spawn a new OpenTelemetry Collector with a tiny, independent configuration and no exporter.

- to integrate with third-party services.

But what do we pay for it? We have already mentioned increased complexity, which means more configuration, more applications, more maintenance, more attack surface and more required knowledge. With complexity comes opportunity. Sneak peek: Our metrics stack has grown from one helm chart to more than 10, and we built an umbrella chart to deploy them all in matching versions (keyword: ArgoCD).

We also need to talk about stability. OpenTelemetry is evolving rapidly and cannot provide all the features you can get with specialized tools. For example, we thought about replacing the Datadog agent, but the Datadog receiver does not support Datadog’s autodiscovery. In each component’s README, there’s a small table telling you which feature is stable, and which is in beta or even alpha phase. Depending on your case, you may be quite limited in what you can realistically use. So, before you run and gun, please do your research, check what you really need and see what’s already covered.

Enough fly-high theoretical exegesis. In the next part, we will get our hands dirty and start replacing Prometheus with OpenTelemetry. The first challenge will be to make OpenTelemetry Collector scrape CoreOS targets (ServiceMonitor and PodMonitor), so we don’t need to touch any existing workload and can still leverage all those nice open-source helm charts that come with these resources.

Credits

Title image by Addictive Creative on Shutterstock