In the previous blog post (Part 1: A Brief Introduction), we gave a theoretical overview of the OpenTelemetry project and mentioned our intention to replace Prometheus with OpenTelemetry because of its advantages.

Here, we will focus on the technical implementation of our monitoring stack and how we replace Prometheus’ metrics scraping with OpenTelemetry Collector. But before we delve into the technical details, it’s important to clarify where we’re coming from.

Initial situation: Our Prometheus-based metrics stack

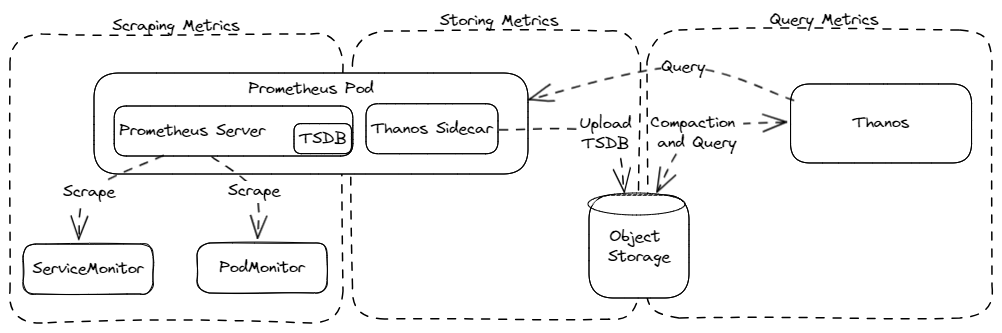

We start with a simple metrics stack based on plain Prometheus and extended with Thanos for high availability and long-term storage. As you can see below, we can divide our stack into three main functionalities: scraping metrics, storing metrics, and querying metrics.

Scraping metrics

The Prometheus Operator provides two custom resource definitions (CRDs), which extend the Kubernetes API, to enable auto-discovery of metrics endpoints while still allowing fine-grained configuration. These resources can be shipped with arbitrary Kubernetes workloads, e.g. Helm charts, to tell Prometheus how to scrape metrics from a particular application. Today, many open source projects include them in their Helm charts.

A PodMonitor is a custom resource that specifies how a group of pods is scraped for metrics. It declares pods that match the selector section as scrape targets. The podMetricsEndpoints setting allows you to specify more details about the corresponding endpoints, such as ports or HTTP paths. A fully functional PodMonitor can be as simple as this (source: Prometheus Operator):

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  name: example-app
  labels:
    team: frontend
spec:
  selector:
    matchLabels:
      app: example-app
  podMetricsEndpoints:
    - port: web
```

See the PodMonitorSpec section of the Prometheus Operator documentation for further specification details.
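For the PodMonitor above to select anything, the target pods need the label app: example-app and a container port named web, because port in podMetricsEndpoints refers to a pod port by name. The following Deployment is a minimal sketch of such a workload; the image name and the port number 8080 are hypothetical, and we assume the application serves metrics on the default path /metrics. Also keep in mind that without a namespaceSelector, a PodMonitor only selects pods in its own namespace.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: example-app
  template:
    metadata:
      labels:
        app: example-app          # matched by the PodMonitor's selector
    spec:
      containers:
        - name: example-app
          image: example.com/example-app:latest   # hypothetical image
          ports:
            - name: web           # referenced by podMetricsEndpoints[].port
              containerPort: 8080 # hypothetical port serving /metrics
```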

The second custom resource definition is called ServiceMonitor. Instead of matching pods directly, a ServiceMonitor references Kubernetes services and declares all pods backing these services as scrape targets. Again, the selector identifies the desired services by label, and the endpoints section allows for a more detailed configuration (see the corresponding docs). A small but working example of a ServiceMonitor looks like this (source: Prometheus Operator):

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: example-app
  labels:
    team: frontend
spec:
  selector:
    matchLabels:
      app: example-app
  endpoints:
    - port: web
```

If you are interested in learning more about the difference between PodMonitor and ServiceMonitor, and when to use which, you can read this GitHub issue.
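Note that the ServiceMonitor selector matches the labels of the Service object itself, not the labels of its pods, and that port in endpoints refers to the name of a Service port. A matching Service could look like the following minimal sketch; the port number 8080 is a hypothetical assumption, and the pods behind the Service are assumed to carry the label app: example-app.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: example-app
  labels:
    app: example-app      # matched by the ServiceMonitor's selector
spec:
  selector:
    app: example-app      # pods backing this Service become scrape targets
  ports:
    - name: web           # referenced by endpoints[].port in the ServiceMonitor
      port: 8080          # hypothetical port
      targetPort: 8080
```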

Storing metrics

After metrics are scraped, Prometheus stores them in its local time-series database (TSDB). By default, this data is kept for 15 days, as described in Prometheus operational aspects. Since we run multiple Prometheus instances for high availability, we need an additional solution to aggregate and retain the data and make it available to users. The Prometheus ecosystem offers several such solutions, for example Thanos (our choice), Cortex, or Grafana Mimir. They all integrate with Prometheus and use an object storage service such as AWS S3 for centralized storage.
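Thanos components learn where the central storage lives from an object storage configuration file, which is passed to them via the --objstore.config-file flag. A minimal sketch for AWS S3, assuming a hypothetical bucket in eu-central-1 and credentials supplied by the environment (for example an IAM role) instead of being written into the file:

```yaml
type: S3
config:
  bucket: example-thanos-metrics          # hypothetical bucket name
  endpoint: s3.eu-central-1.amazonaws.com
  region: eu-central-1
  # no access_key/secret_key here: we assume the AWS credential chain
  # (environment variables or an IAM role) provides credentials
```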

Querying metrics and alerting

The stack needs a centralized query endpoint for further processing, such as analysis (rules, alerts) and visualization (dashboards). This endpoint must cover all data, both in long-term storage and the recent data still held by the individual Prometheus instances, so that business decisions and alerts about misbehaving applications are based on the complete picture. In our case, this is also covered by Thanos.
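In Thanos, this central query endpoint is the Query component, which fans out each request to StoreAPI endpoints such as the Prometheus sidecars (recent data) and the Store Gateway (data in object storage). A minimal sketch of its container arguments, assuming hypothetical headless services in the o11y-metrics namespace that expose a port named grpc, and assuming the Prometheus Operator's default prometheus_replica external label is used to deduplicate the highly available Prometheus instances:

```yaml
# Container args for a Thanos Query deployment (service names are hypothetical)
args:
  - query
  - --http-address=0.0.0.0:10902
  - --grpc-address=0.0.0.0:10901
  # merge series that differ only in this label (HA Prometheus pairs)
  - --query.replica-label=prometheus_replica
  # Prometheus sidecars: recent data
  - --endpoint=dnssrv+_grpc._tcp.prometheus-thanos-sidecar.o11y-metrics.svc.cluster.local
  # Store Gateway: long-term data in object storage
  - --endpoint=dnssrv+_grpc._tcp.thanos-store.o11y-metrics.svc.cluster.local
```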

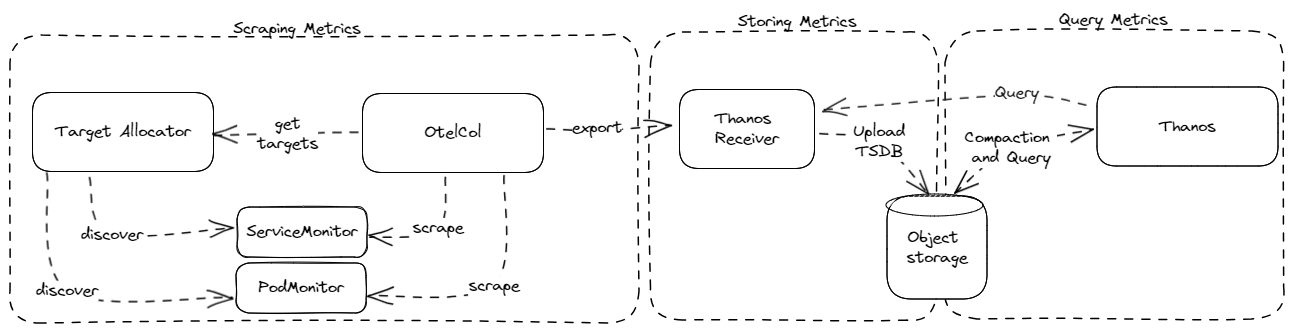

The new world: Our OpenTelemetry metrics stack

As mentioned in the previous post, one advantage of OpenTelemetry is the ability to decouple the scraping part from the storage and query mechanisms. We replace Prometheus with the OpenTelemetry Collector in the scraping part of the stack. To do one thing at a time, we keep the changes to the storage and query parts as minimal as possible. In the old stack, we added sidecars to the Prometheus pods to pull data into Thanos. With the OpenTelemetry Collector, this pull approach changes to a push mechanism, so Thanos needed a new way to accept incoming data. We therefore added the optional Thanos Receiver component, which accepts data via Prometheus Remote Write. Since Prometheus Remote Write is widely supported, this should be sufficient for most data sink systems. If you need more information or other options, pick a data sink of your choice from the existing OTelCol exporters.
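The Thanos Receiver is the receive subcommand of the Thanos binary. A minimal sketch of its container arguments; we assume the remote write listener is moved to port 9091 to match the exporter endpoint of our environment (the Thanos default is 19291), while the storage paths and the replica label value are hypothetical:

```yaml
# Container args for a Thanos Receive deployment (paths and label are hypothetical)
args:
  - receive
  # accepts Prometheus Remote Write on /api/v1/receive
  - --remote-write.address=0.0.0.0:9091
  # StoreAPI, so Thanos Query can read the received data
  - --grpc-address=0.0.0.0:10901
  - --http-address=0.0.0.0:10902
  - --tsdb.path=/var/thanos/receive
  # uploads blocks to the same object storage as the rest of the stack
  - --objstore.config-file=/etc/thanos/objstore.yml
  # external label that uniquely identifies this receiver replica
  - --label=receive_replica="0"
```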

Scraping metrics

OpenTelemetry Collector uses a different mechanism to work with the aforementioned Kubernetes CRDs. It decouples the discovery of the custom resources from the metrics collection capabilities of Prometheus, allowing them to scale independently. The OpenTelemetry project introduces an additional component called the Target Allocator.

The Target Allocator serves two functions:

- Discovery of Prometheus Custom Resources (ServiceMonitor and PodMonitor)

- Even distribution of Prometheus targets among a pool of Collectors

(OpenTelemetry Authors in Documentation: Target Allocator)

The Target Allocator is responsible for discovering scrape targets and offering them as scrape configuration via an HTTP endpoint. The collector itself periodically polls the Target Allocator for available so-called jobs. This mechanism builds on an existing Prometheus feature known as http_sd_config (HTTP-based service discovery). OpenTelemetry's Prometheus receiver simply elevates this option to a critical step in its scraping process.

Implementation details

We are finally going to get our hands dirty. We start with an up-and-running Kubernetes cluster, which contains the OpenTelemetry Operator and the required metrics CRDs. If you want to follow along with your own cluster, make sure to match this setup. For more information on installing the OpenTelemetry Operator, see the official documentation on installing via Helm.

Following the operator approach, we need to create a custom resource to tell the OpenTelemetry Operator to deploy a collector. Here, it is called OpenTelemetryCollector. The custom resource contains the configuration for both what to deploy and how it should behave. You can find the details of this and the other OTel Operator CRDs in the official repository. Since the Target Allocator is an optional component, we start building our custom resource by enabling the TA:

```yaml
apiVersion: opentelemetry.io/v1alpha1
kind: OpenTelemetryCollector
metadata:
  name: o11y-metrics-collector
  namespace: o11y-metrics
spec:
  mode: statefulset
  targetAllocator:
    enabled: true
    prometheusCR:
      enabled: true
```

This configuration enables the deployment of the Target Allocator and makes it watch the metrics custom resources. We also proactively set the deployment mode of the collector to StatefulSet. While there are multiple modes, e.g. DaemonSet for log collection, the combination of Prometheus receiver and Target Allocator only works with a StatefulSet.

Unfortunately, this is not enough to make the Target Allocator work. We also need to allow it to access the relevant resources on the Kubernetes side, meaning RBAC. We do this by creating a ClusterRole and a corresponding ClusterRoleBinding, which grant the necessary permissions to the ServiceAccounts the operator creates for our OpenTelemetryCollector resource. The OpenTelemetry project lists the minimum required permissions in its docs.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: "metrics-collector-clusterrole"
rules:
  - apiGroups: [""]
    resources:
      - nodes
      - nodes/proxy
      - nodes/metrics
      - services
      - endpoints
      - pods
    verbs: ["get", "list", "watch"]
  - apiGroups: ["monitoring.coreos.com"]
    resources:
      - servicemonitors
      - podmonitors
    verbs: ["get", "list", "watch"]
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs: ["get", "list", "watch"]
  - apiGroups: ["discovery.k8s.io"]
    resources:
      - endpointslices
    verbs: ["get", "list", "watch"]
  - nonResourceURLs: ["/metrics", "/metrics/cadvisor"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: "metrics-collector-clusterrolebinding"
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: metrics-collector-clusterrole
subjects:
  - kind: ServiceAccount
    name: o11y-metrics-collector
    namespace: o11y-metrics
  - kind: ServiceAccount
    name: o11y-metrics-collector-targetallocator
    namespace: o11y-metrics
```

As mentioned in Part 1: A Brief Introduction, the OpenTelemetry Collector has three building blocks: receivers, processors, and exporters. We will use them to construct our telemetry pipeline.

Receivers

The first building block of our pipeline, our receiver, collects telemetry data from various sources. The open-telemetry/opentelemetry-collector-contrib repository provides us with a variety of receivers to choose from, as listed in opentelemetry-collector-contrib/receivers. Since we are interested in receiving metrics from Prometheus-compatible endpoints, Prometheus Receiver is the way to go.

The Prometheus Receiver supports the Prometheus scrape_config, which we use to create a static job for self-scraping. In addition, we need to allow the receiver to query jobs from the Target Allocator. We also need to set a collector_id. This is used to distribute jobs to multiple collectors, for example when using horizontal autoscaling. This is out of scope for now, though.

```yaml
spec:
  config: |
    receivers:
      prometheus:
        config:
          scrape_configs:
            - job_name: 'self'
              scrape_interval: 10s
              static_configs:
                - targets: [ '0.0.0.0:8888' ]
        target_allocator:
          endpoint: http://o11y-metrics-collector-targetallocator:80
          interval: 30s
          collector_id: collector-0
    # [...]
```

Processors

After metrics have been received, processors process them before they are exported.

Similar to receivers, there is an extensive list of processors.

We start by using the Batch Processor to buffer metrics for traffic optimization. We also want to add the name of the collector itself as a label, which we can do with the Attributes Processor. When working with the Batch Processor, please note this recommendation about processor ordering:

"It is highly recommended to configure the batch processor on every collector. The batch processor should be defined in the pipeline after the memory_limiter as well as any sampling processors. This is because batching should happen after any data drops such as sampling." (OpenTelemetry Authors in README: Batch Processor)

```yaml
spec:
  config: |
    processors:
      batch:
        send_batch_size: 1000
        timeout: 15s
      attributes:
        actions:
          - action: insert
            key: collector
            value: ${env:POD_NAME}
    # [...]
```

To use POD_NAME here, we need to make it available as an environment variable. This is done as usual in Kubernetes; the configuration is passed directly down from the custom resource:

```yaml
spec:
  env:
    - name: POD_NAME
      valueFrom:
        fieldRef:
          apiVersion: v1
          fieldPath: metadata.name
```

Exporters

After our metrics are processed, exporters send them to the data sink of our choice. In our case, we use the prometheusremotewriteexporter. For quick and independent validation, you can also use the prometheusexporter. It creates a scrapable endpoint on the OpenTelemetry Collector and exposes all collected and processed metrics there. Note that the operator does not create a ServiceMonitor or PodMonitor for this endpoint, so we don't create an infinite scraping loop here.

```yaml
spec:
  config: |
    exporters:
      prometheusremotewrite: # this is specific to our environment
        endpoint: http://thanos-receiver:9091/api/v1/receive
      prometheus: # this is for demo purposes only
        endpoint: 0.0.0.0:8080
    # [...]
```

Now we are ready to connect the dots and build the pipeline section. The complete configuration is shown below:

```yaml
apiVersion: opentelemetry.io/v1alpha1
kind: OpenTelemetryCollector
metadata:
  name: o11y-metrics-collector
  namespace: o11y-metrics
spec:
  mode: statefulset
  targetAllocator:
    enabled: true
    prometheusCR:
      enabled: true
  env:
    - name: POD_NAME
      valueFrom:
        fieldRef:
          apiVersion: v1
          fieldPath: metadata.name
  config: |
    receivers:
      prometheus:
        config:
          scrape_configs:
            - job_name: 'self'
              scrape_interval: 10s
              static_configs:
                - targets: [ '0.0.0.0:8888' ]
        target_allocator:
          endpoint: http://o11y-metrics-collector-targetallocator:80
          interval: 30s
          collector_id: collector-0
    processors:
      batch:
        send_batch_size: 1000
        timeout: 15s
      attributes:
        actions:
          - action: insert
            key: collector
            value: ${env:POD_NAME}
    exporters:
      prometheusremotewrite: # this is specific to our environment
        endpoint: http://thanos-receiver:9091/api/v1/receive
      prometheus: # this is for demo purposes only
        endpoint: 0.0.0.0:8080
    service:
      pipelines:
        metrics:
          receivers: [prometheus]
          processors: [batch, attributes]
          exporters: [prometheusremotewrite, prometheus]
```

Validating our scraping pipeline

We can verify that our stack scrapes metrics by confirming that a specific metric, exposed by an endpoint behind a ServiceMonitor and scraped by the OTelCol Prometheus receiver, shows up on the exporter side of our pipeline. This is what we added the Prometheus exporter for. Obviously, we need some metric producers to scrape data from. For this, we choose kube-state-metrics (KSM). It exposes metrics about the state of Kubernetes objects, such as kube_deployment_created. If you and your cluster are still with us, please check out the Kubernetes deployment section of the KSM repository.

To verify, we port-forward to the Prometheus exporter of our collector with

```shell
$ kubectl --namespace o11y-metrics port-forward o11y-metrics-collector-collector-0 8080:8080
```

and take a look at the KSM metrics:

```shell
$ curl http://localhost:8080/metrics | grep 'kube_deployment_created'
kube_deployment_created{collector="o11y-metrics-collector-collector-0",container="kube-state-metrics",deployment="kube-state-metrics",endpoint="http",instance="100.124.227.255:8080",job="kube-state-metrics",namespace="default",pod="kube-state-metrics-766c44b7c5-6pm7t",service="kube-state-metrics"} 1.708012279e+09
```

Here, we can see that we successfully scraped metrics by auto-discovering the kube-state-metrics endpoint and added our collector name as a label to each metric. With this approach, you should be able to scrape the vast majority of your Prometheus-compatible metrics endpoints without configuring them one by one. In the next blog post, we will cover another scraping method: auto-discovering scrape targets through pod annotations. Stay tuned!

Bonus: Useful tips for getting started

If this is your first contact with OpenTelemetry, you should read up on the following topics. We have outlined a minimal configuration for scraping Prometheus metrics; the goal was not a production-ready configuration.

- Memory limiting: Protect your collectors with the memorylimiterprocessor. But be warned: it will refuse data instead of getting OOMKilled, so you need proper alerting in place.
- Autoscaling: The OpenTelemetry Collector CRD provides spec.autoscaler for horizontal autoscaling. As the CRD implements the scale subresource, you can also use VPA to scale your collector vertically.
- healthcheckextension: Add a health check endpoint to your collectors.

Credits

Title image by Gumpanat on Shutterstock