Introduction

Whenever a new project team is formed or new members are onboarded, the same challenge arises: each team member must set up a functional working environment on their machine before they can be productive. This includes, but is not limited to:

- Compilers and SDKs in the correct versions, such as the Java Development Kit (JDK), Python, Rust, Go or Node.js

- Configuration files for accessing cloud environments

- Build tools (make, gradle, maven)

- Local databases

- Various command line interfaces (e.g. “aws” for Amazon Web Services, “az” for Microsoft Azure, “gh” for GitHub, “ng” for Angular and many more)

- Cloud-native tools like kubectl and k9s for working with Kubernetes clusters

- Integrated development environments (e.g. Visual Studio Code, IntelliJ IDEA)

- Container engines and local Kubernetes clusters (e.g. Rancher Desktop)

The need for complex and extensive toolchains results in a long and error-prone setup phase before a team can start working on the actual project. Onboarding new team members can cause further problems, because a newcomer may install versions of some tools that differ from those used by the rest of the team.

If a team member works on several projects, which is not uncommon these days, things get even worse, as different projects may use incompatible versions of the same software. For example, one team may be using Java 21, while another team may need to support an older version for backward compatibility. A person contributing to both projects therefore has to install both JDKs on their machine and switch between the projects by changing the values of the JAVA_HOME and PATH environment variables.

Another aspect of setting up the environment is configuring access to the different stages, both locally and in the cloud: URIs of the different cloud environments and repositories, database credentials and many other settings need to be managed.

Options

In an ideal world, every team member would simply check out the project’s repository and be able to create their first successful pull request on the same day. We will see how DevContainers can get us very close to this.

But before we dig a little deeper, let’s discuss some alternative approaches and their pros and cons.

Some options for addressing the problem are:

- Virtual machines: When developer laptops became powerful enough and virtualization tools like VMware Player and later VirtualBox became popular about 20 years ago, projects occasionally packaged complex development setups into a virtual machine image. With this approach, all required tools are installed in a VM image, and each team member works with an instance of this image.

- Cloud-based environments: Instead of running virtual machines locally, one could use project-specific VDIs (Virtual Desktop Infrastructure) like Amazon WorkSpaces or cloud-based development environments like Gitpod.

- Package managers: Tools like Nix or tox make it possible to manage different versions of the required tools in parallel. They help with the problem of different versions of the same tool, but solve only one aspect of the problem.

- DevContainers: DevContainers combine modern technologies such as containers and modular, extensible integrated development environments (IDEs) such as Visual Studio Code to create reproducible and consistent development environments as code.

Pros and Cons

| | Pros | Cons |
|---|---|---|
| Virtual machines | | |
| Cloud-based environments | | |
| Package managers | | |
| DevContainers | | |

DevContainers

In recent years, defining things “as code” has proven to have many advantages over traditional manual configuration, especially in the form of “infrastructure as code”: The entire configuration is defined in text files which are stored in an SCM (software configuration management) system like Git, allowing an environment (e.g. a staging environment) to be “cloned” in a consistent and reproducible way.

Thanks to the popularity of containers, we can set up complex development environments for our applications locally using Docker Compose, but the tools needed to build our software could not be managed in a similarly simple way.

DevContainers use container technology in a similar way to “docker compose” or “infrastructure as code”: The entire tool set of a development environment is described in a configuration file (devcontainer.json), which is stored in the .devcontainer folder of a project’s source code repository. The environment defined in this way is then started by an IDE with DevContainer support (e.g. Visual Studio Code, or “VS Code” for short) and is available in two ways:

- The CLI tools (e.g. “aws”, “jq”, “kubectl” or others) installed in the DevContainer as described in devcontainer.json are available in the integrated terminal of the IDE.

- Part of the IDE itself runs inside the container, so IDE extensions installed there are available whenever the IDE is connected to the DevContainer.
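Which extensions are installed into the container-side part of VS Code can itself be declared in devcontainer.json, under the customizations.vscode property. The following is a minimal sketch rather than a configuration from a real project: the image is the Java 21 image used later in this article, and the extension ID (the “Extension Pack for Java”) is only an example.

```jsonc
{
  "name": "Extensions example",
  "image": "mcr.microsoft.com/devcontainers/java:1-21-bookworm",
  // Tool-specific settings are grouped under "customizations"; "vscode" applies to VS Code only
  "customizations": {
    "vscode": {
      // Installed into the VS Code server inside the container when the container is created
      "extensions": ["vscjava.vscode-java-pack"]
    }
  }
}
```

Because this list is versioned together with the source code, every team member gets the same extensions without installing them by hand.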

When a new tool is required, it is sufficient to update the configuration of the DevContainer. When a team member pulls the updated configuration from the SCM, the IDE notices that the configuration has changed and offers to rebuild the DevContainer.

There are several ways to add new software to a DevContainer:

- Add a predefined “feature” to the devcontainer.json. The list of predefined features is quite long and contains a lot of software and tools from Anaconda, AWS CLI and Azure CLI to Zig (a new programming language).

- Use a Dockerfile to modify the base image of the container. This approach is especially useful when the required software is not available as a predefined feature or when more advanced configuration is required.
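Both approaches can be combined in a single devcontainer.json: the image is built from the Dockerfile first, and the listed features are then installed on top of it. A minimal sketch, assuming a Dockerfile exists next to devcontainer.json; the Node.js feature and its version are arbitrary examples.

```jsonc
{
  "name": "Features and Dockerfile",
  // Build the base image from the Dockerfile in the .devcontainer folder
  "build": { "dockerfile": "Dockerfile" },
  // Predefined features are referenced by their registry ID and major version
  "features": {
    "ghcr.io/devcontainers/features/node:1": { "version": "20" },
    "ghcr.io/devcontainers/features/aws-cli:1": {}
  }
}
```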

Integration

When the IDE is connected to the DevContainer, the directories containing the source code checked out from an SCM system like Git, as well as the build artifacts, still reside on the host machine. The local Maven and npm repositories, in contrast, can be stored in a persistent container volume, so that their contents are not lost when the container is stopped or rebuilt.
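If the local Maven repository should survive container rebuilds, a named Docker volume can be declared with the mounts property. A minimal sketch: the volume name devcontainer-m2-cache is hypothetical, and the chown in postCreateCommand assumes that the image's non-root user vscode has sudo rights, as in the Microsoft devcontainer images.

```jsonc
{
  "name": "Maven cache example",
  "image": "mcr.microsoft.com/devcontainers/java:1-21-bookworm",
  "features": {
    "ghcr.io/devcontainers/features/java:1": { "version": "21", "installMaven": "true" }
  },
  "mounts": [
    // Named Docker volume for ~/.m2; it outlives the container and is reused after a rebuild
    "source=devcontainer-m2-cache,target=/home/vscode/.m2,type=volume"
  ],
  // A freshly created volume may be owned by root, so hand it over to the vscode user
  "postCreateCommand": "sudo chown -R vscode:vscode /home/vscode/.m2"
}
```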

Simple example

The best way to demonstrate the power of DevContainers is with a practical example.

Let’s assume that, from time to time, we need to maintain a legacy project that has a Java 8 codebase and cannot be migrated to a newer version. The legacy application is a simple Spring Boot application, built with Maven, that exposes a REST service on port 8080.

Without DevContainers, one would have to install the outdated JDK (Java Development Kit) and always check system-wide environment variables like JAVA_HOME and PATH to make sure the correct JDK is being used.

With DevContainers, the correct JDK is available automatically as soon as the project is opened in an IDE that supports DevContainers.

Prerequisites

In order to follow the example, you will need the following prerequisites (assuming a Windows 10 or Windows 11 machine):

- Docker Runtime (e.g. Rancher Desktop)

- git (including git-bash)

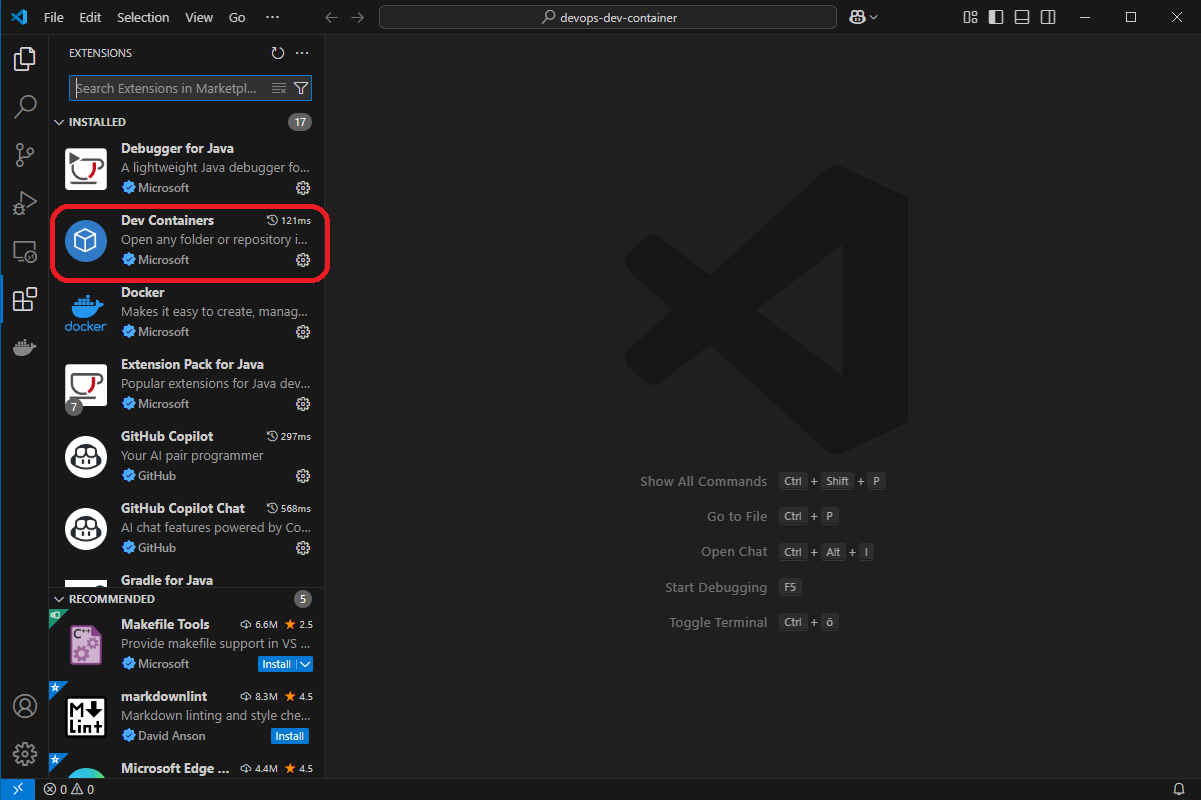

- Visual Studio Code (“VS Code” for short) with the Dev Containers extension

Test your setup

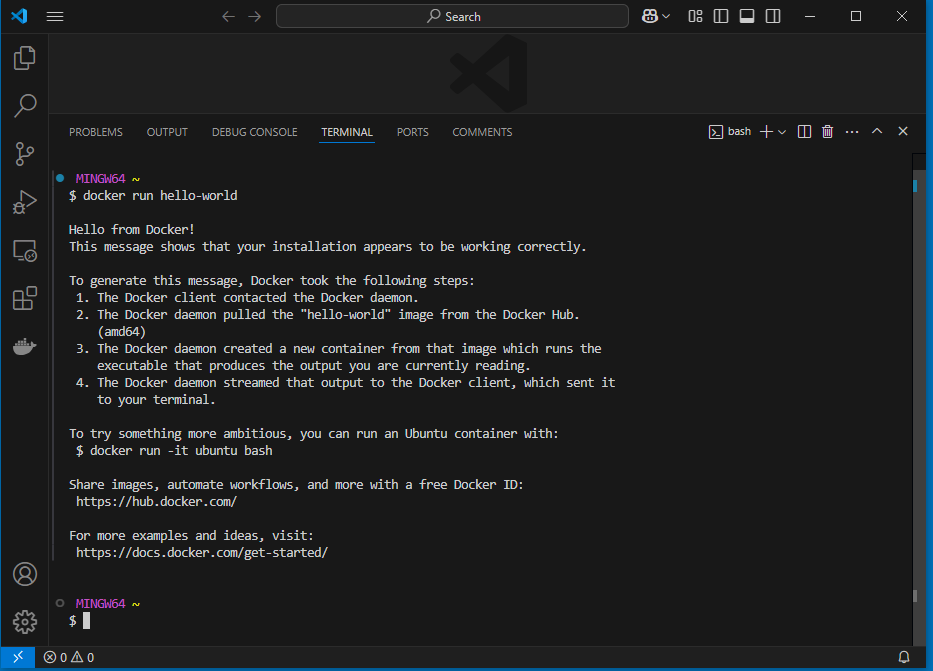

To make sure everything is working, you should be able to run the docker hello-world image in a terminal window in VS Code:

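```bash
docker run hello-world
```

The output should start with “Hello from Docker!”, followed by a message confirming that your installation appears to be working correctly.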

Specify the DevContainer for the legacy application

In the repository of the legacy application, we create a folder .devcontainer containing the following devcontainer.json file:

```json
{
  "name": "Java 8",
  "image": "mcr.microsoft.com/devcontainers/java:dev-8-jdk-bookworm",
  "features": {
    "ghcr.io/devcontainers/features/java:1": {
      "version": "8",
      "installMaven": "true",
      "installGradle": "false"
    }
  }
}
```

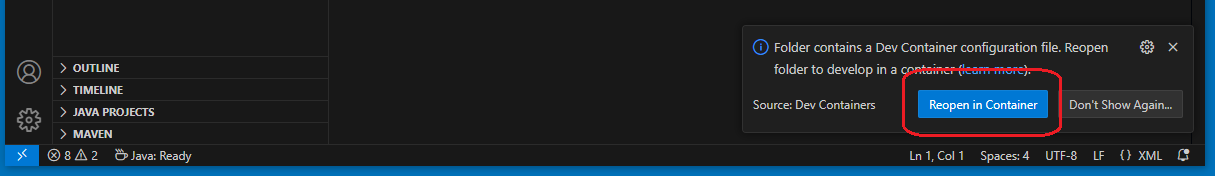

When we open the repository in a new VS Code window, VS Code should recognize the .devcontainer folder and offer the option to reopen the project in a container:

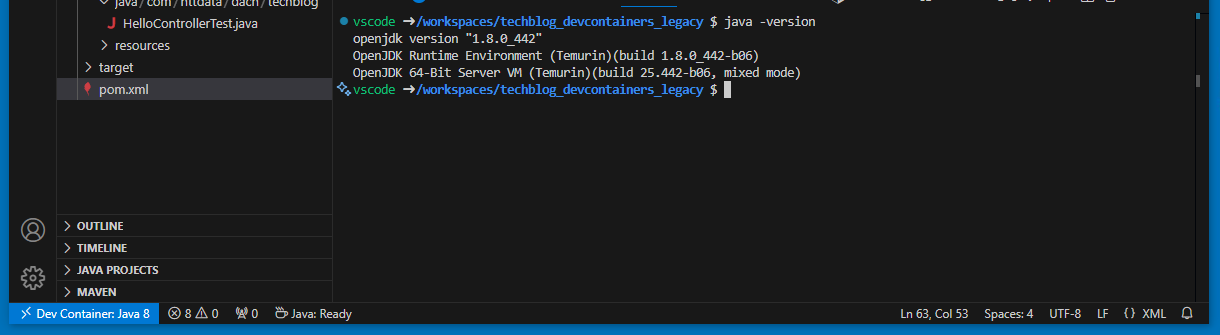

VS Code will now build and launch the container. When the container is ready, the remote indicator at the left end of the status bar changes to show that VS Code is connected to the DevContainer.

When we now open a terminal in VS Code, it offers a “bash” shell (because the container is based on a Linux OS). When we execute

```bash
java -version
```

in this shell, the output shows that Java 1.8 is installed.

Now we run

```bash
mvn clean install
```

in the bash terminal inside VS Code to build our legacy application.
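Recurring steps like this build can also be automated. The postCreateCommand property runs a command once after the container has been created; the following sketch extends the Java 8 configuration from above so that the JDK version is printed and the project is built automatically. Whether an automatic build on container creation is desirable depends on the project.

```jsonc
{
  "name": "Java 8",
  "image": "mcr.microsoft.com/devcontainers/java:dev-8-jdk-bookworm",
  "features": {
    "ghcr.io/devcontainers/features/java:1": {
      "version": "8",
      "installMaven": "true",
      "installGradle": "false"
    }
  },
  // Executed once after the container is created; -B runs Maven in non-interactive batch mode
  "postCreateCommand": "java -version && mvn -B clean install"
}
```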

When we start the application with

```bash
java -jar target/legacy-1.0-SNAPSHOT.jar
```

the IDE offers to open the URL “http://localhost:8080” in a browser, so that the application running inside the DevContainer can be tested from the host machine.

If more control is required, or ports need to be forwarded explicitly, this can be done either manually in the “Ports” tab or in the devcontainer.json file.
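In devcontainer.json, explicit forwarding is configured with forwardPorts, and per-port behavior with portsAttributes. A minimal sketch for the legacy application; the label text is an arbitrary example.

```jsonc
{
  "name": "Java 8",
  "image": "mcr.microsoft.com/devcontainers/java:dev-8-jdk-bookworm",
  // Always forward container port 8080 to the host, even before the application is started
  "forwardPorts": [8080],
  "portsAttributes": {
    "8080": {
      // Name shown in the "Ports" tab
      "label": "Legacy REST service",
      // Open a browser as soon as the forwarded port is detected
      "onAutoForward": "openBrowser"
    }
  }
}
```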

Advanced example

In the simple example above we just used a Java image and specified an option to install Apache Maven as a build tool.

In the advanced example, we combine predefined features with a Dockerfile that additionally provides a configuration file for the AWS CLI login.

Before we start with the advanced example, let’s shed some light on the authentication and authorization mechanisms used by the AWS CLI:

When using the AWS CLI, the following information is required to access an AWS account in a particular region (see also the AWS documentation):

- Access key

- Secret access key

- Account ID

- Region

- Role to assume

This information can be provided in environment variables (AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and so on), but the more common option is to use a config file in the .aws folder in the user’s home directory, which looks like this:

```ini
[profile my-individual-profile-name]
sso_session=my-individual-sso-session-name
sso_account_id=123456789011
sso_role_name=AdminRole
region=eu-central-1
output=json
```

Without DevContainers, each team member must maintain the .aws/config file and may even use individual names for the profiles.

With DevContainers, the .aws/config file can become part of the container image, and aws commands like

```bash
aws --profile common-profile-name s3 ls
```

will work right out of the box in every team member’s IDE.
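To save team members from typing --profile, the AWS CLI's AWS_PROFILE environment variable can additionally be set for the container. A minimal sketch using the remoteEnv property; it assumes a Dockerfile that copies a shared config containing a profile named common-profile-name (the placeholder used above) to ~/.aws/config, like the one in the advanced example below.

```jsonc
{
  "name": "AWS profile example",
  // Assumed Dockerfile that copies the shared config file to /home/vscode/.aws/config
  "build": { "dockerfile": "Dockerfile" },
  "features": {
    "ghcr.io/devcontainers/features/aws-cli:1": {}
  },
  // Set for processes started by VS Code, such as the integrated terminal
  "remoteEnv": {
    // The AWS CLI uses this profile whenever no --profile option is given
    "AWS_PROFILE": "common-profile-name"
  }
}
```

With this setting, a plain aws s3 ls already uses the shared profile.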

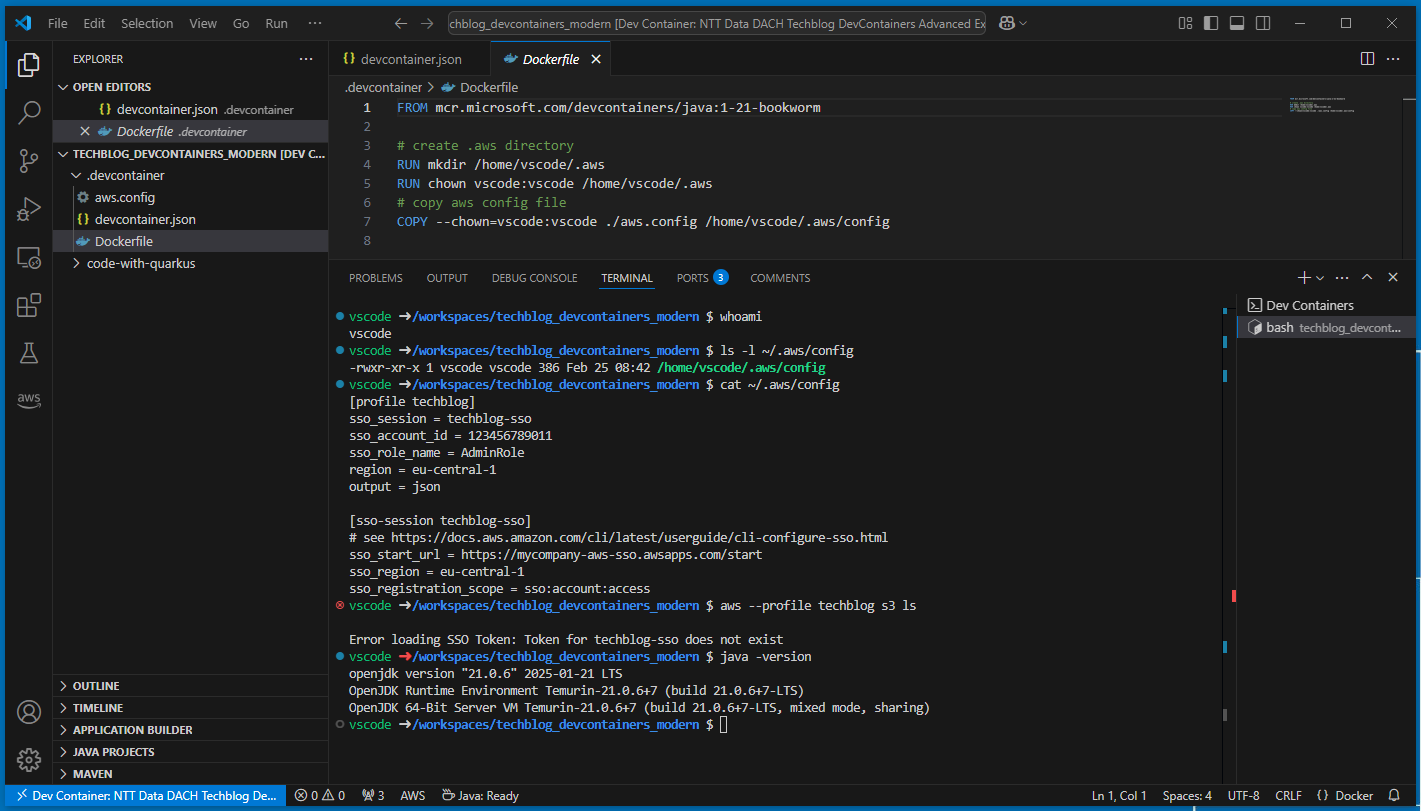

For the advanced example, we use the following directory structure:

```
.devcontainer
├── aws.config
├── devcontainer.json
└── Dockerfile
```

The content of the devcontainer.json is:

```json
{
  "name": "NTT Data DACH Techblog DevContainers Advanced Example",
  "build": {
    "dockerfile": "Dockerfile"
  },
  "features": {
    "ghcr.io/devcontainers/features/java:1": {
      "version": "21",
      "installMaven": "true",
      "installGradle": "false"
    },
    "ghcr.io/devcontainers-extra/features/quarkus-sdkman": {
      "version": "latest"
    },
    "ghcr.io/devcontainers/features/aws-cli": {}
  },
  "forwardPorts": [5005, 8080]
}
```

With this devcontainer.json, we refer to the Dockerfile in the .devcontainer folder to build the base image. Additionally, we add the following features:

- Java JDK 21, including Apache Maven

- AWS CLI

- Quarkus CLI

The Dockerfile reads:

```dockerfile
FROM mcr.microsoft.com/devcontainers/java:1-21-bookworm

# create the .aws directory and hand it over to the non-root user "vscode"
RUN mkdir -p /home/vscode/.aws \
    && chown vscode:vscode /home/vscode/.aws

# copy the shared AWS CLI config file
COPY --chown=vscode:vscode ./aws.config /home/vscode/.aws/config
```

The Dockerfile creates the ~/.aws directory for the vscode user and copies the aws.config file from the .devcontainer folder to ~/.aws/config in the container.

Finally, the aws.config file defines the profile “techblog” for a (non-existent) AWS account 123456789011 together with a single sign-on (SSO) configuration.

After the new container has been built, we can use the AWS CLI from within the container like this:

```bash
aws --profile techblog s3 ls
```

In this case, the command fails because we have not run aws sso login and the account used for the demo does not exist, but the principle should be clear.

Conclusion

With DevContainers, it is possible to define and maintain a consistent set of tools (like compilers, SDKs and CLIs) and configurations as code, without the need to run and maintain heavy virtual machine images or to use cloud-based development environments. This makes scenarios like setting up training environments or onboarding new team members much easier. It also helps to recreate the setup used to build a specific software release in a reproducible and consistent manner.